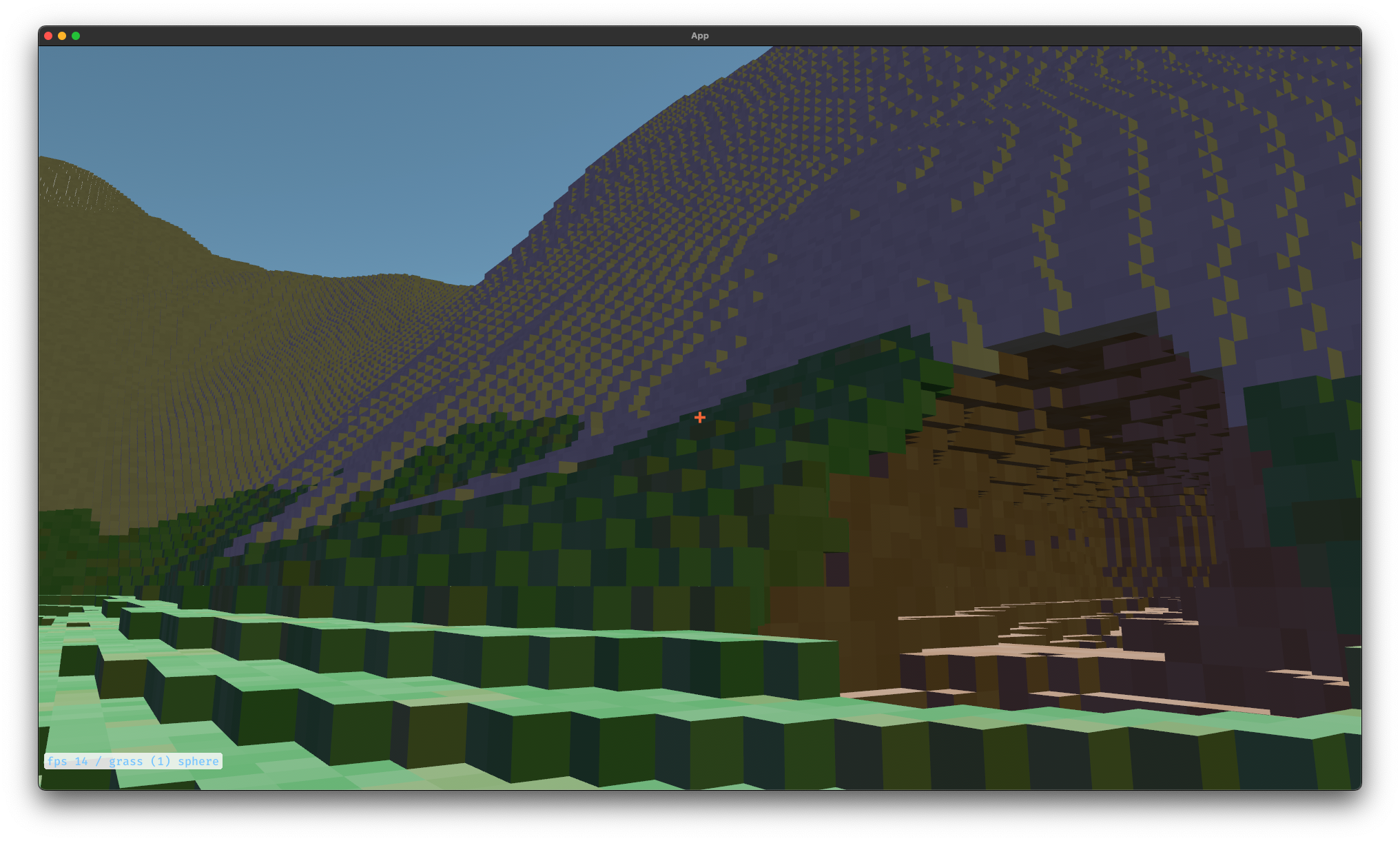

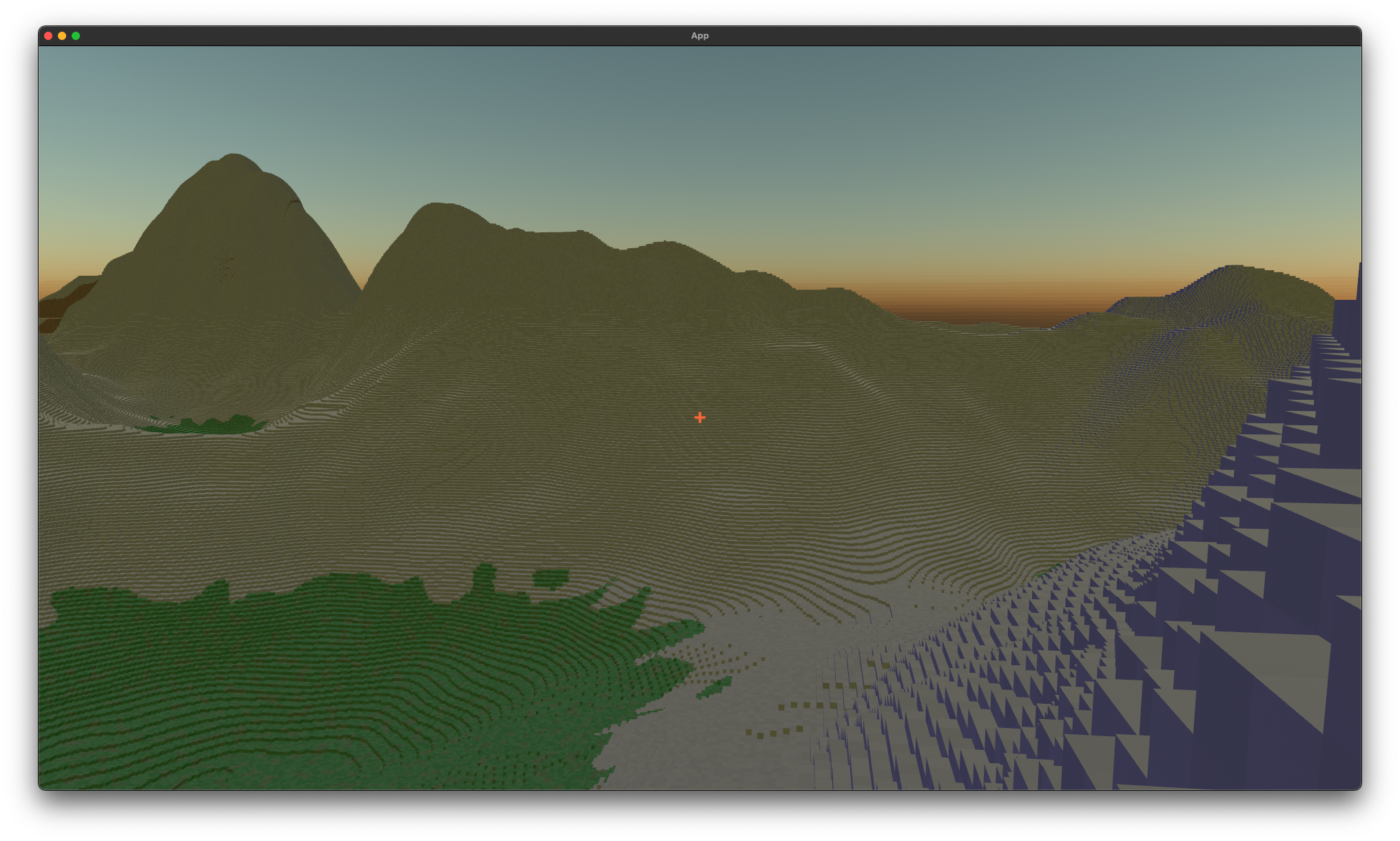

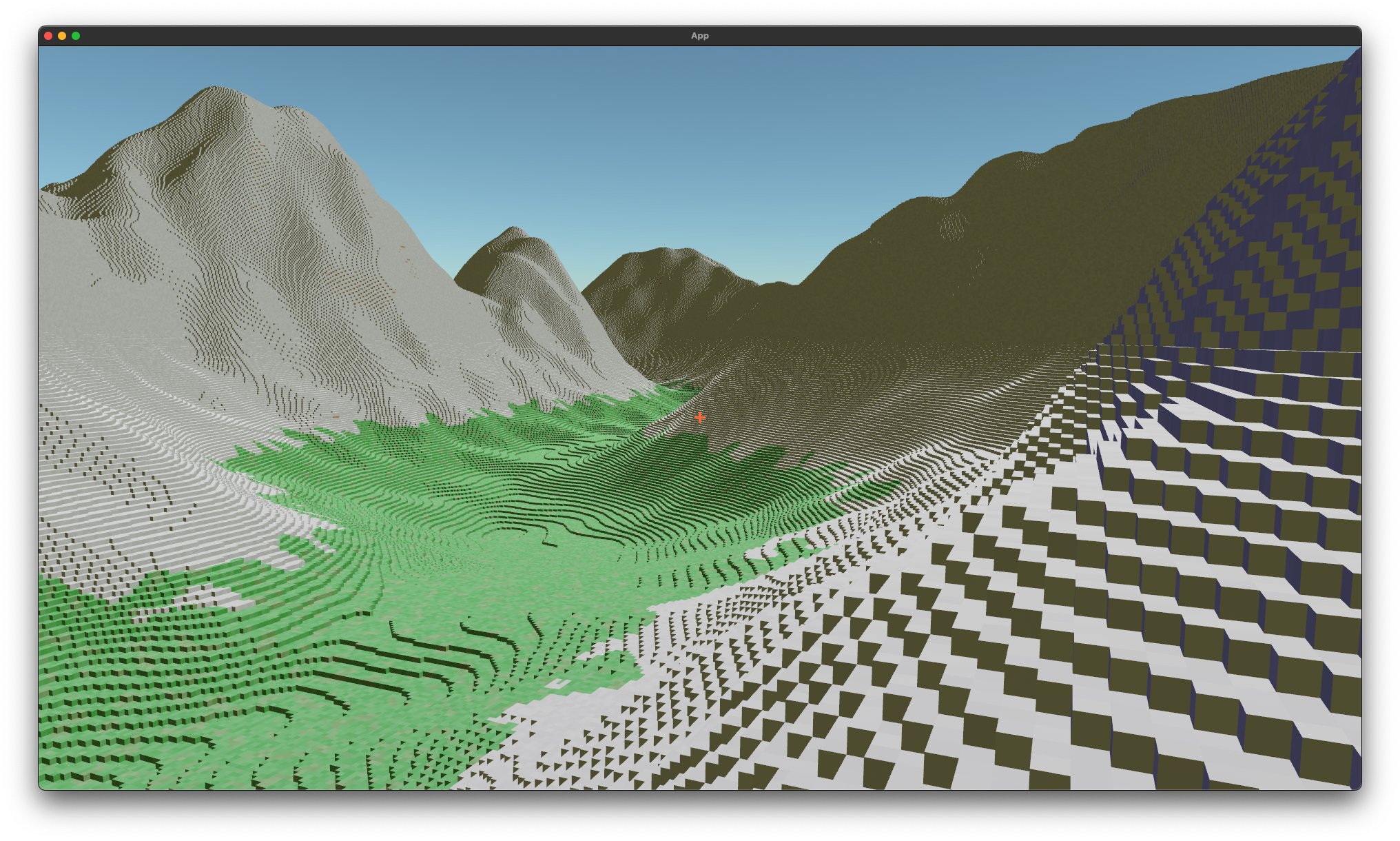

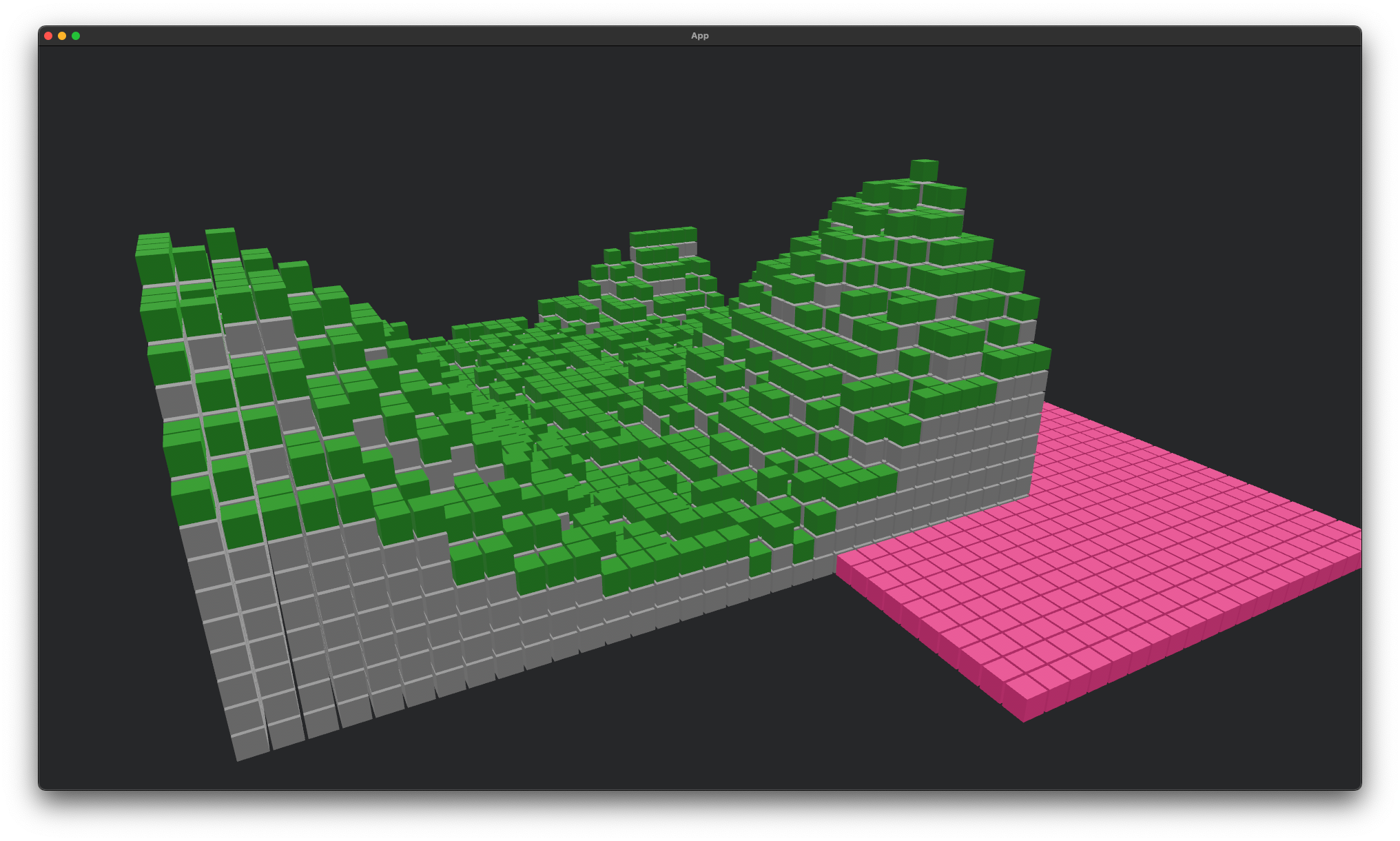

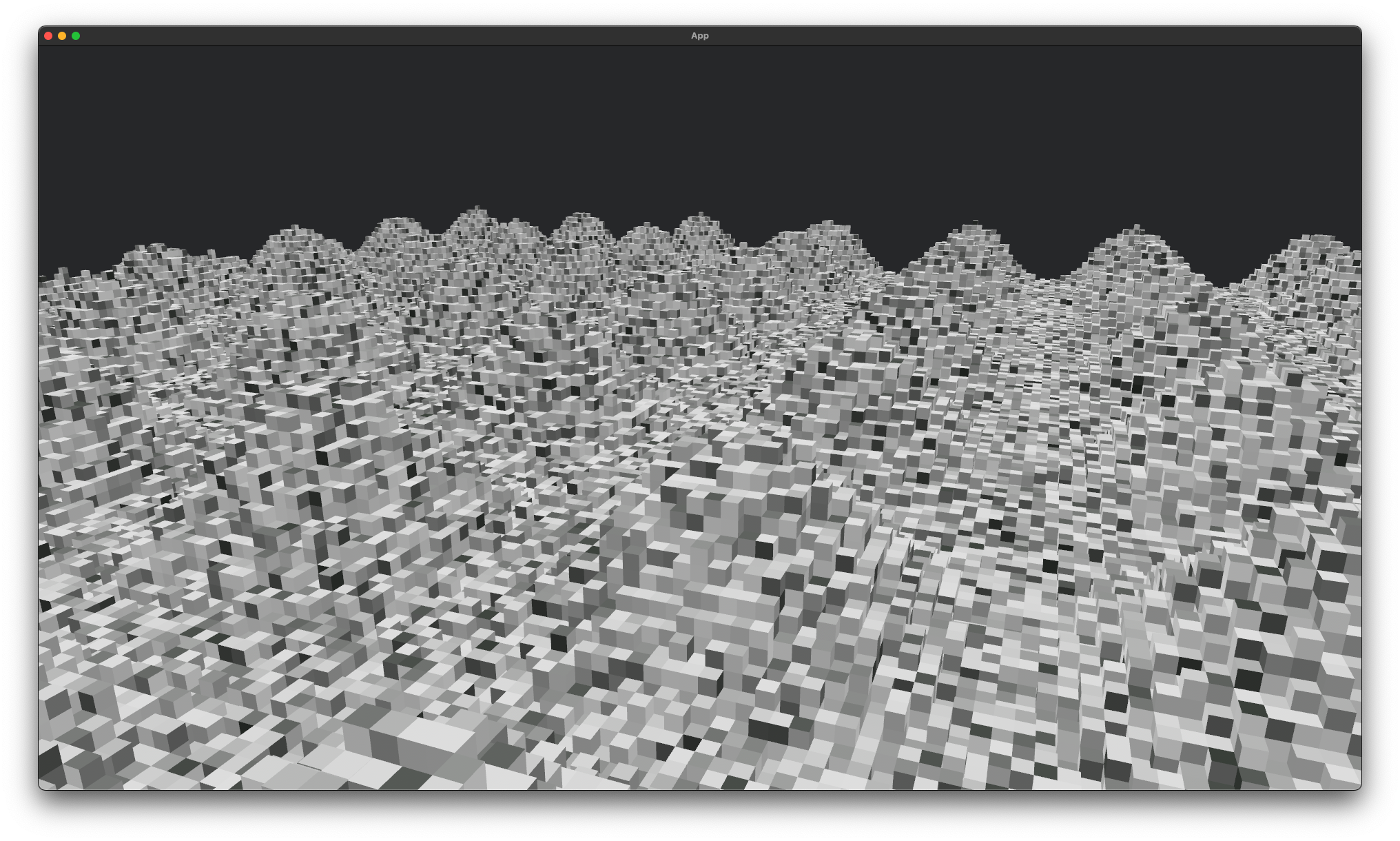

Voxel textures

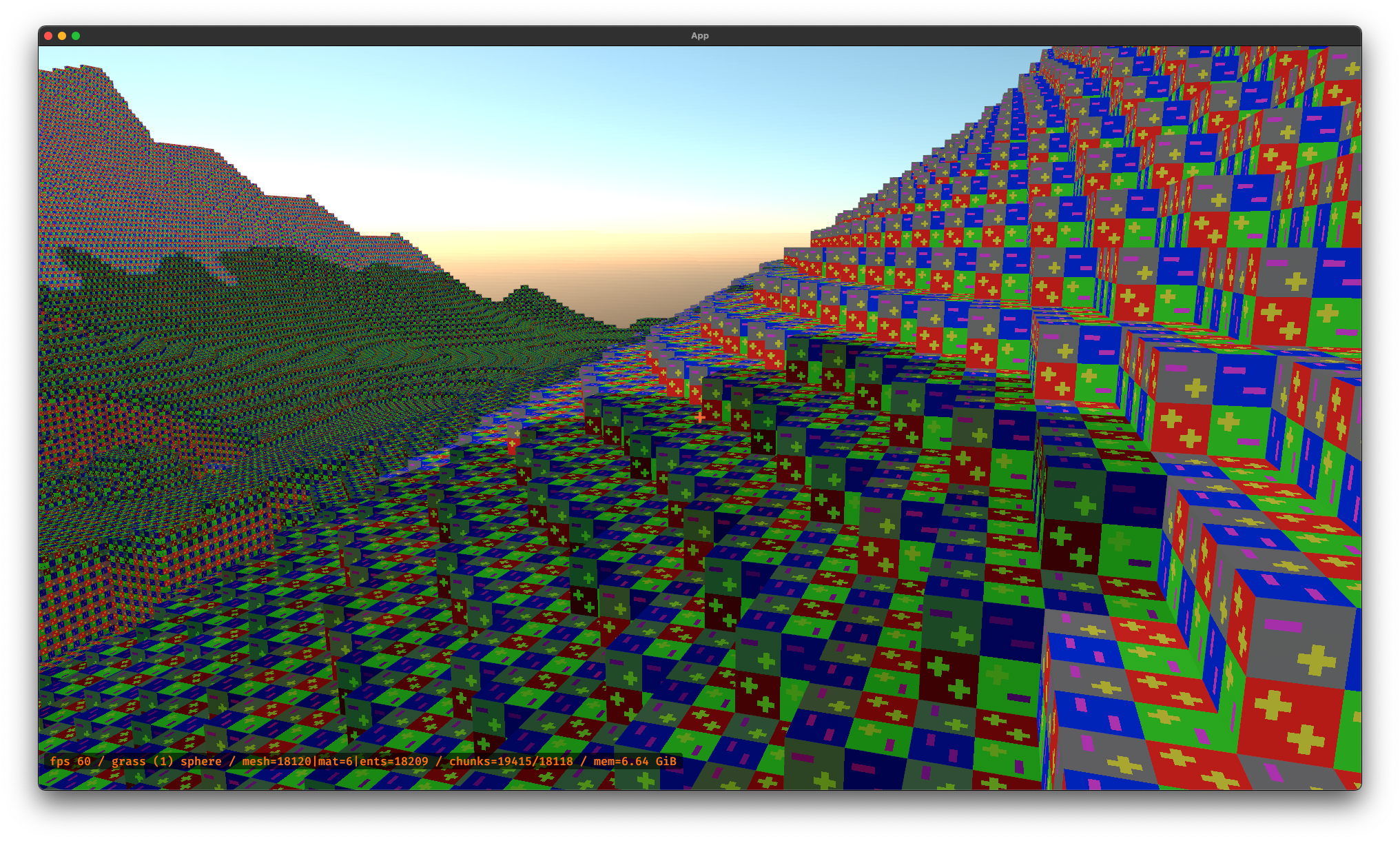

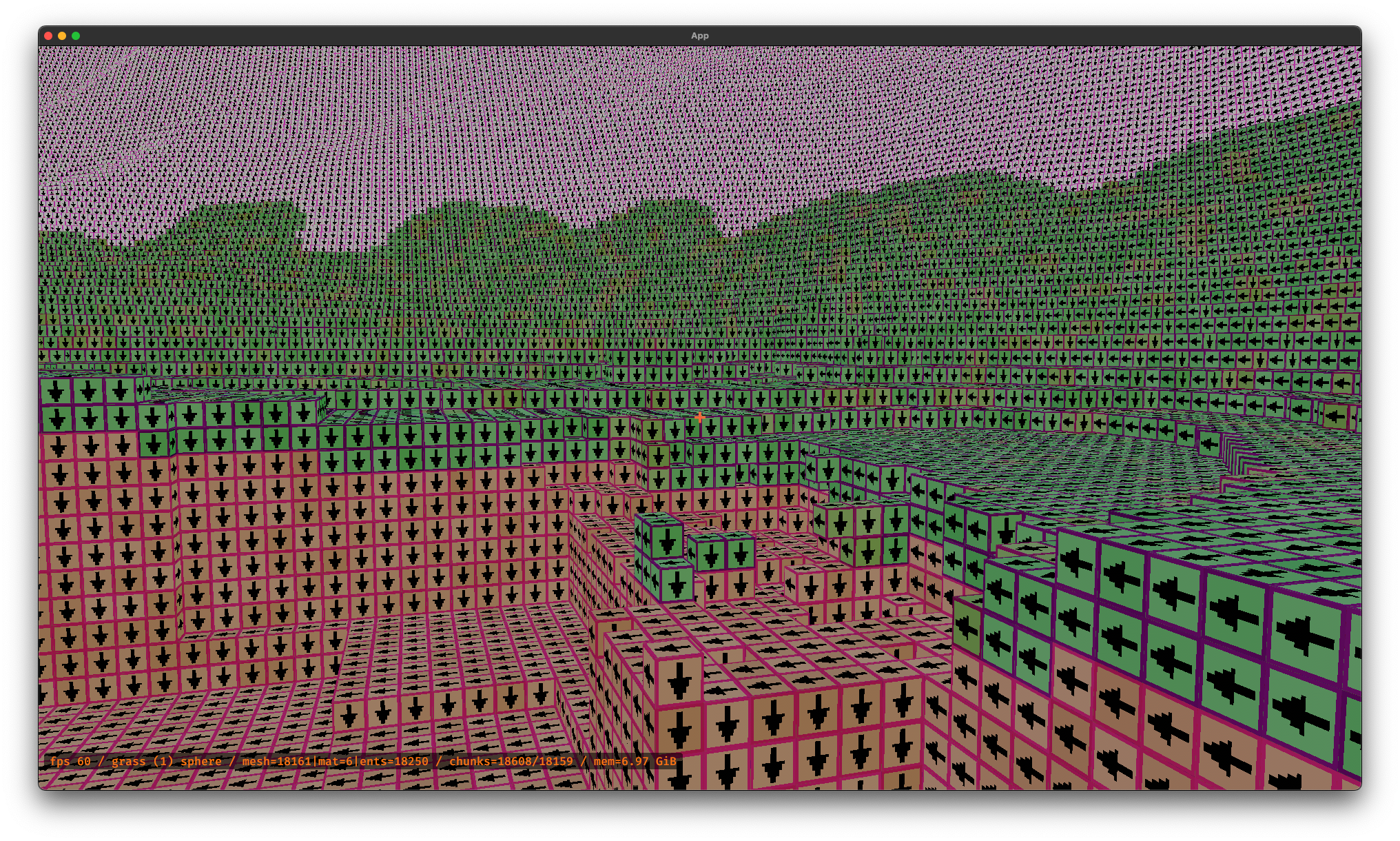

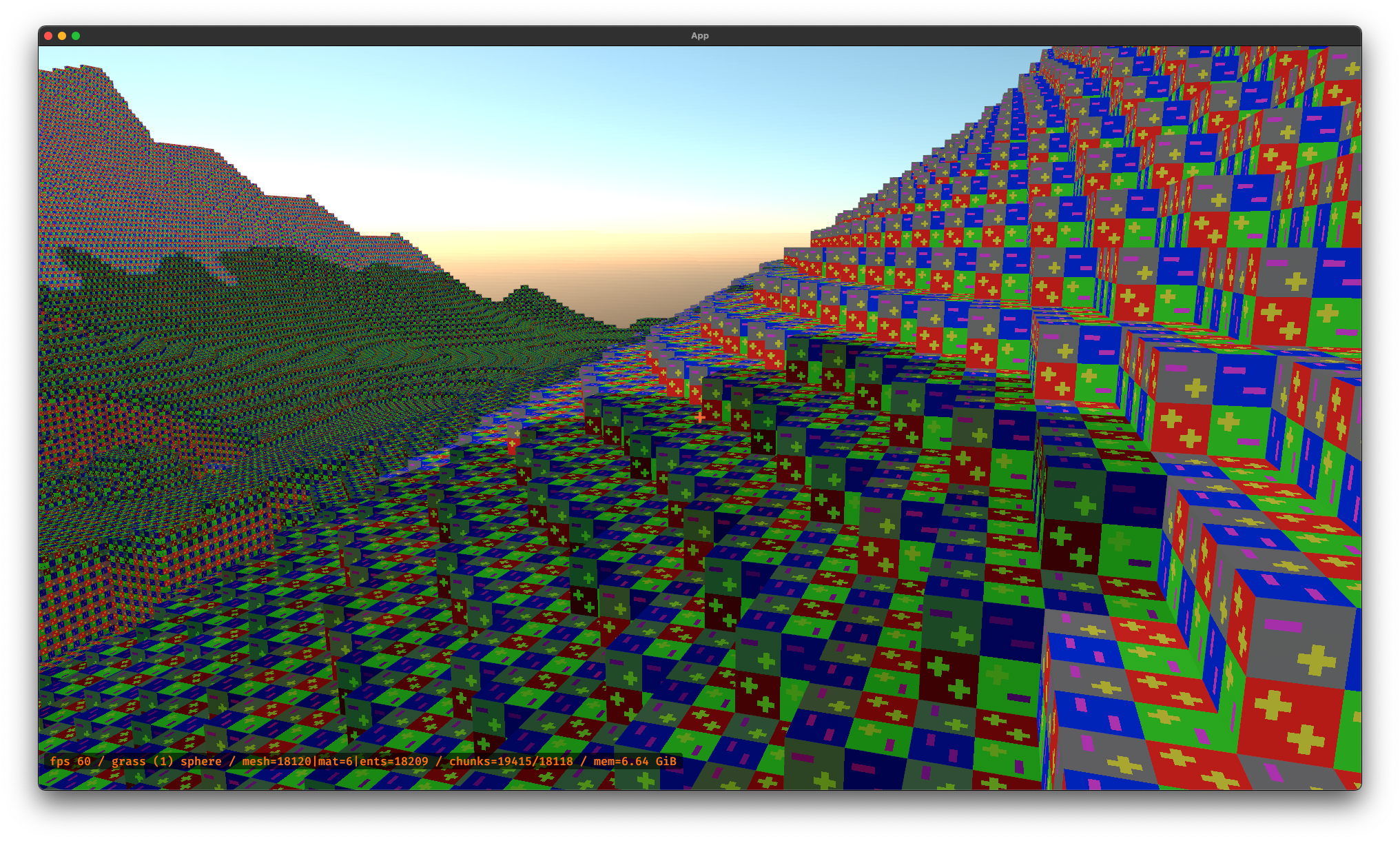

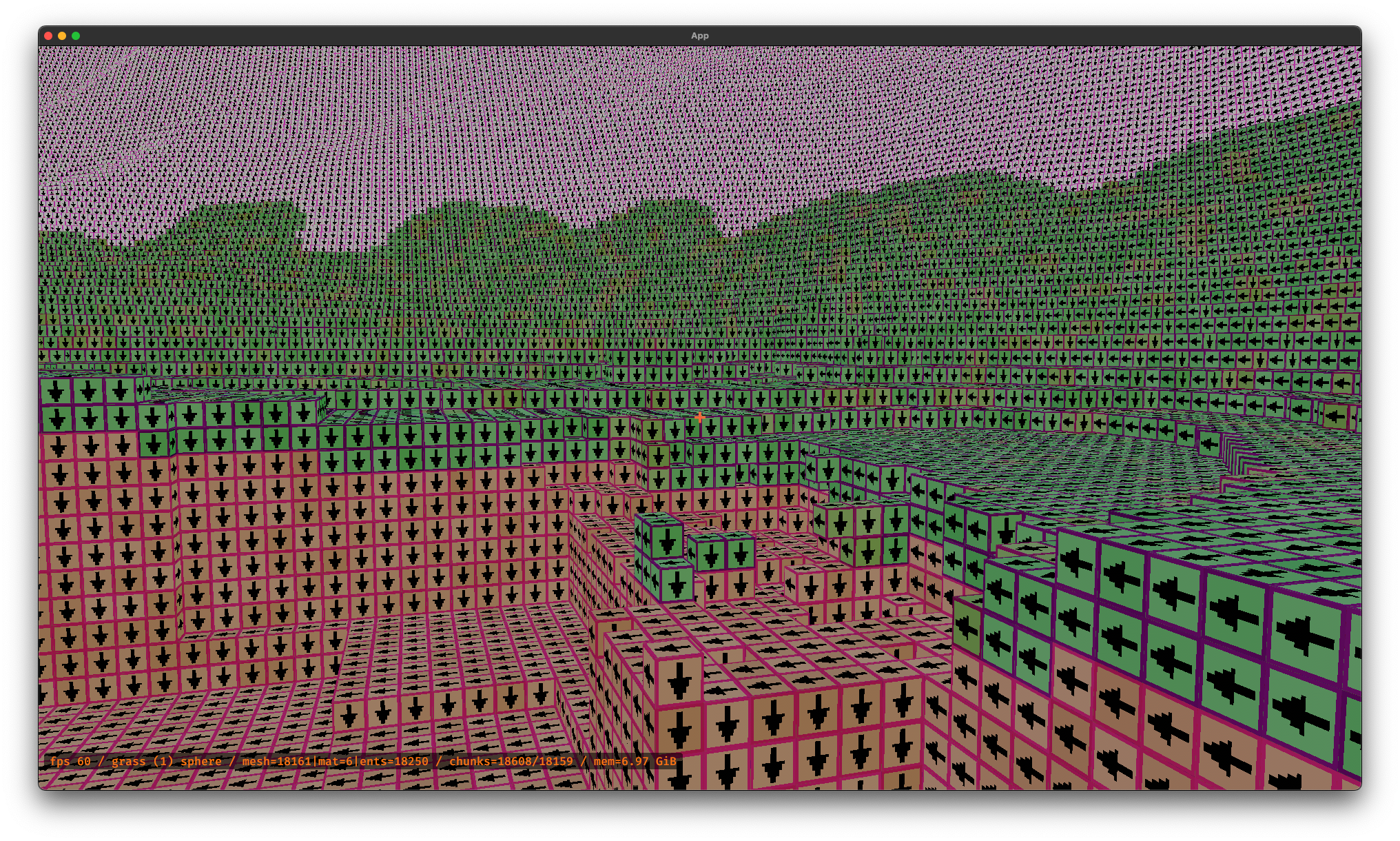

Debug textures to make sure things are working at all...

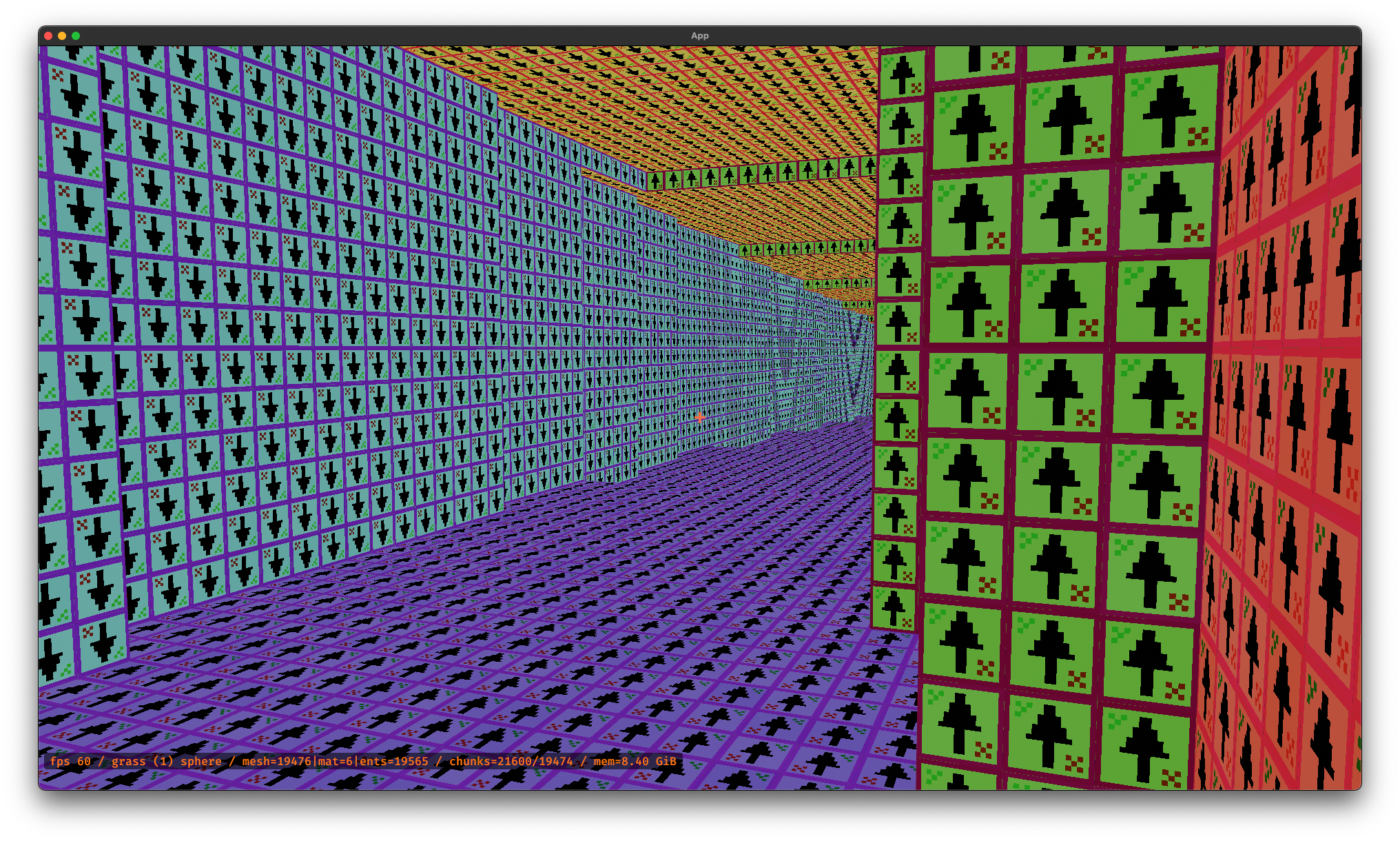

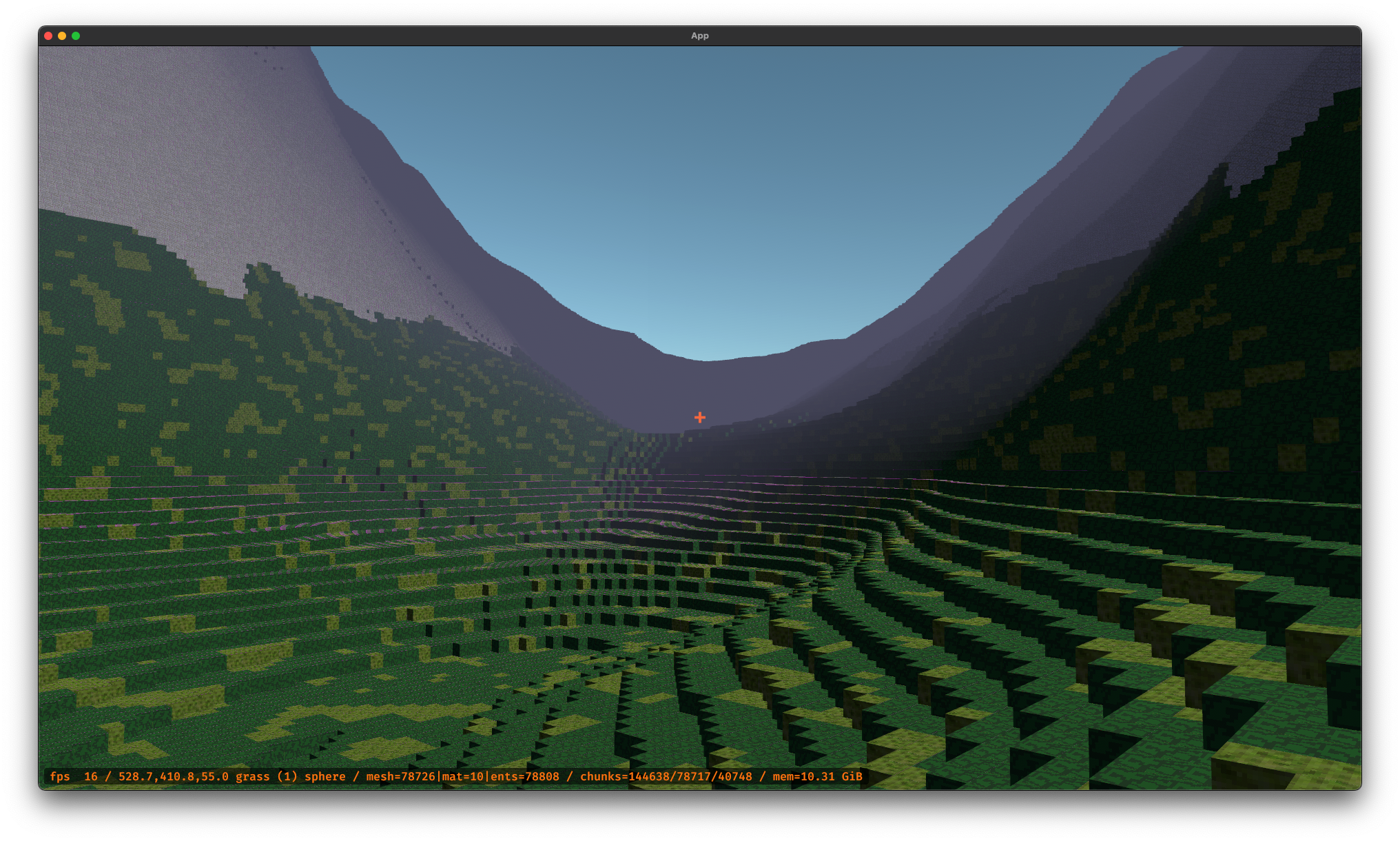

And finally working...

This is a bit off-topic from the usual blog posts, but I want to post it because YouTube Music's default behavior of playing disliked music is baffling to me. I simply don't understand why YouTube Music plays songs you've disliked. I could maybe see it if it played them only after a certain "snooze" period of weeks or months. But regardless...

I'm justifying this post as a post about considering user experience in application design 😄

First, this assumes you're using a TamperMonkey-compatible browser and are willing and able to install TamperMonkey as a browser extension.

This can be an extremely useful tool if you want or need to make small tweaks to the behavior of a website that you frequently use.

Credit goes entirely to GrayStrider on GitHub for posting a UserScript on how to do this about 4 years prior to the writing of this article.

Here is a slightly modified version of the original gist, which includes the required UserScript comment header:

```javascript
// ==UserScript==
// @name         YouTube Music: skip dislikes
// @namespace    http://tampermonkey.net/
// @version      2024-11-18
// @description  try to take over the world!
// @author       You
// @match        https://music.youtube.com/*
// @icon         https://www.google.com/s2/favicons?sz=64&domain=github.com
// @grant        none
// ==/UserScript==

(function () {
    'use strict';

    const $$ = (id) => document.getElementById(id);
    const $ = (className) => document.getElementsByClassName(className);

    const check = () => {
        // Log something so it's clear the script is active and working.
        // The noise is fine for this use case.
        console.log('Checking to see if the current song is disliked...');

        const container = $$('like-button-renderer');
        const skipBtn = $('next-button')[0];

        // The player elements may not exist yet while the page is still loading,
        // so only act once both have been found.
        if (container && skipBtn && container.getAttribute('like-status') === 'DISLIKE') {
            skipBtn.click();
            // Wait longer after a skip so the next song has time to load.
            setTimeout(check, 5000);
        } else {
            setTimeout(check, 500);
        }
    };

    setTimeout(check, 2500);
})();
```

The original references are here:

TamperMonkey supports syncing your scripts between computers and browsers. This is definitely worth setting up and only takes a minute.

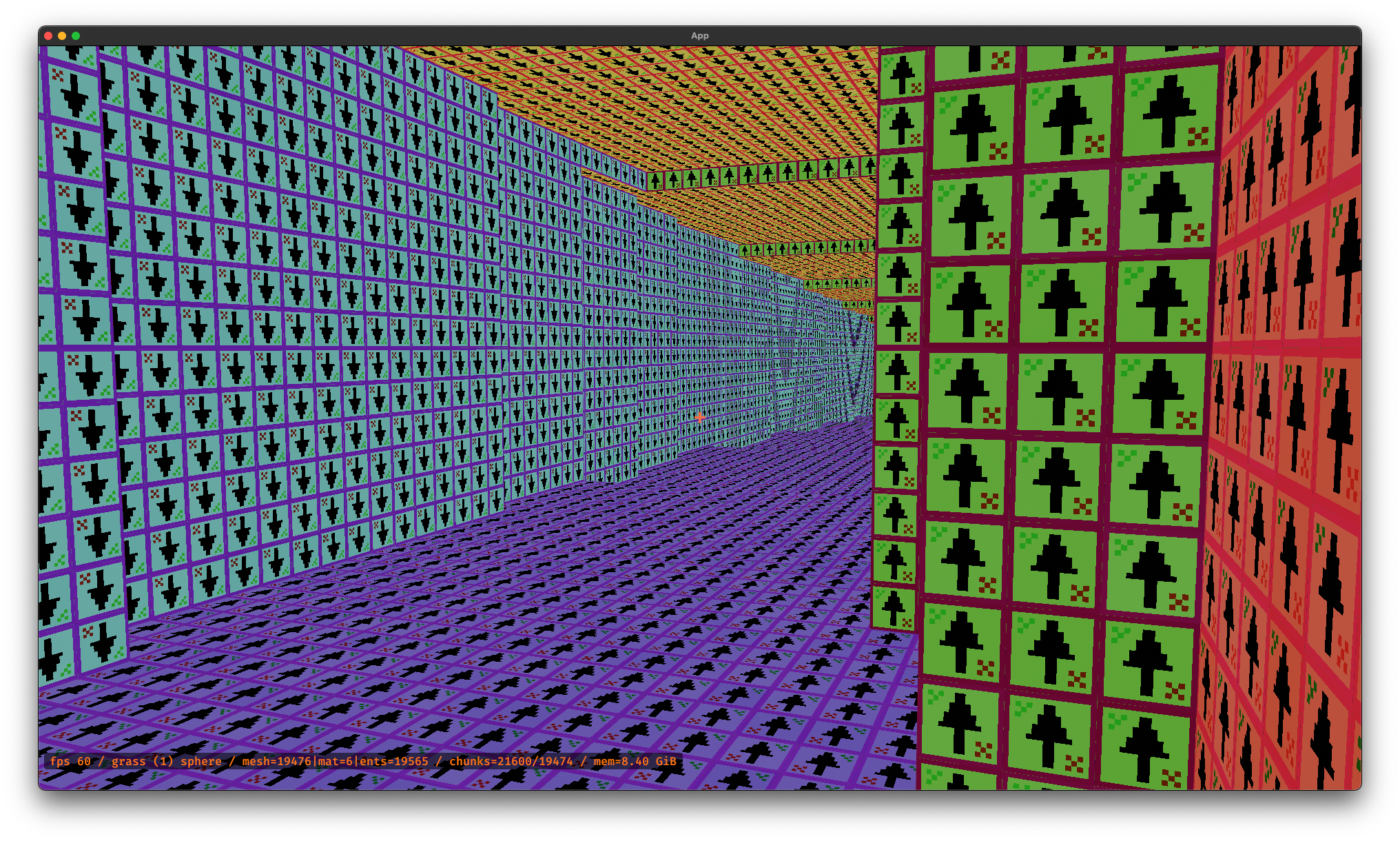

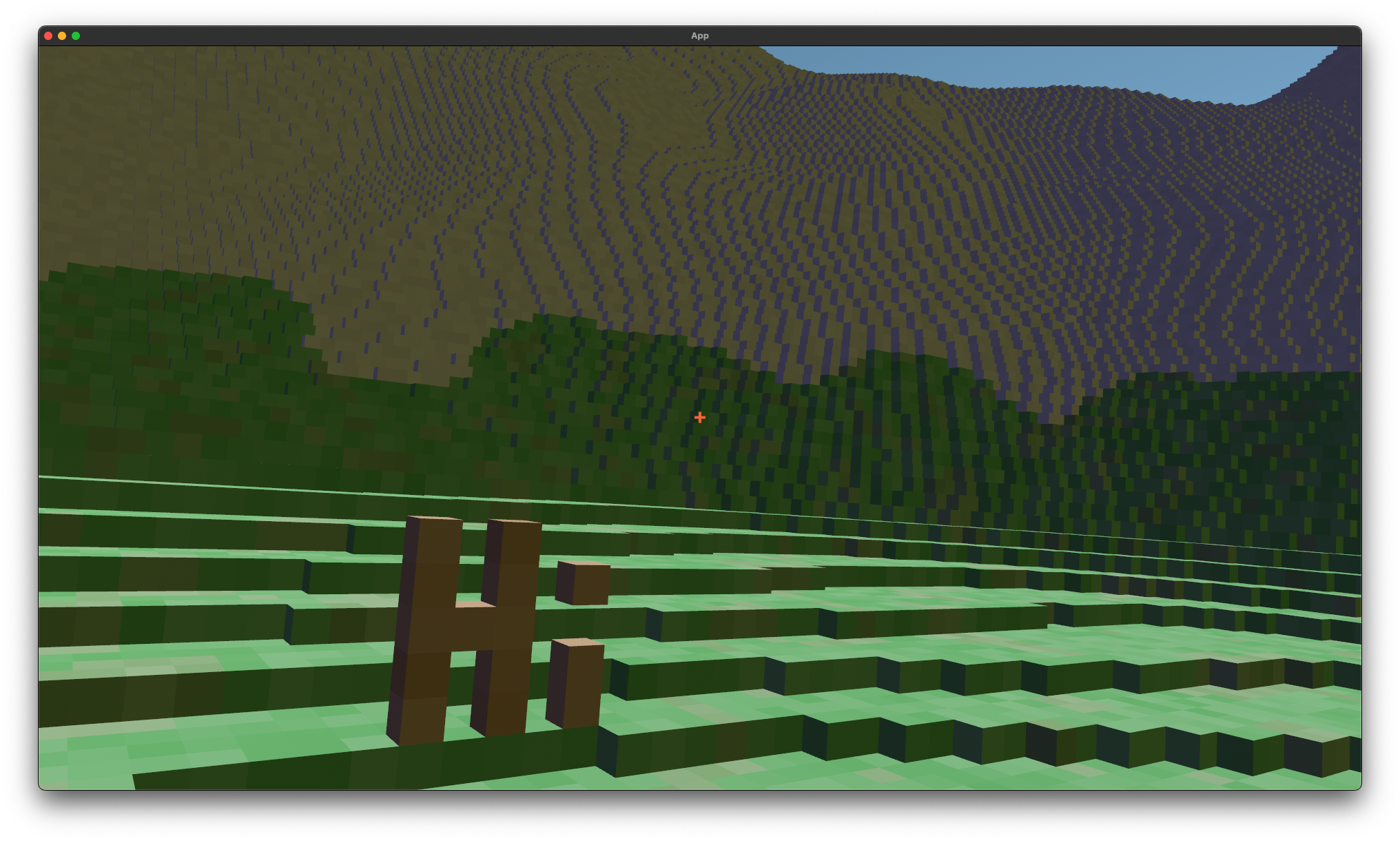

Dug a tunnel into the side of the mountain...

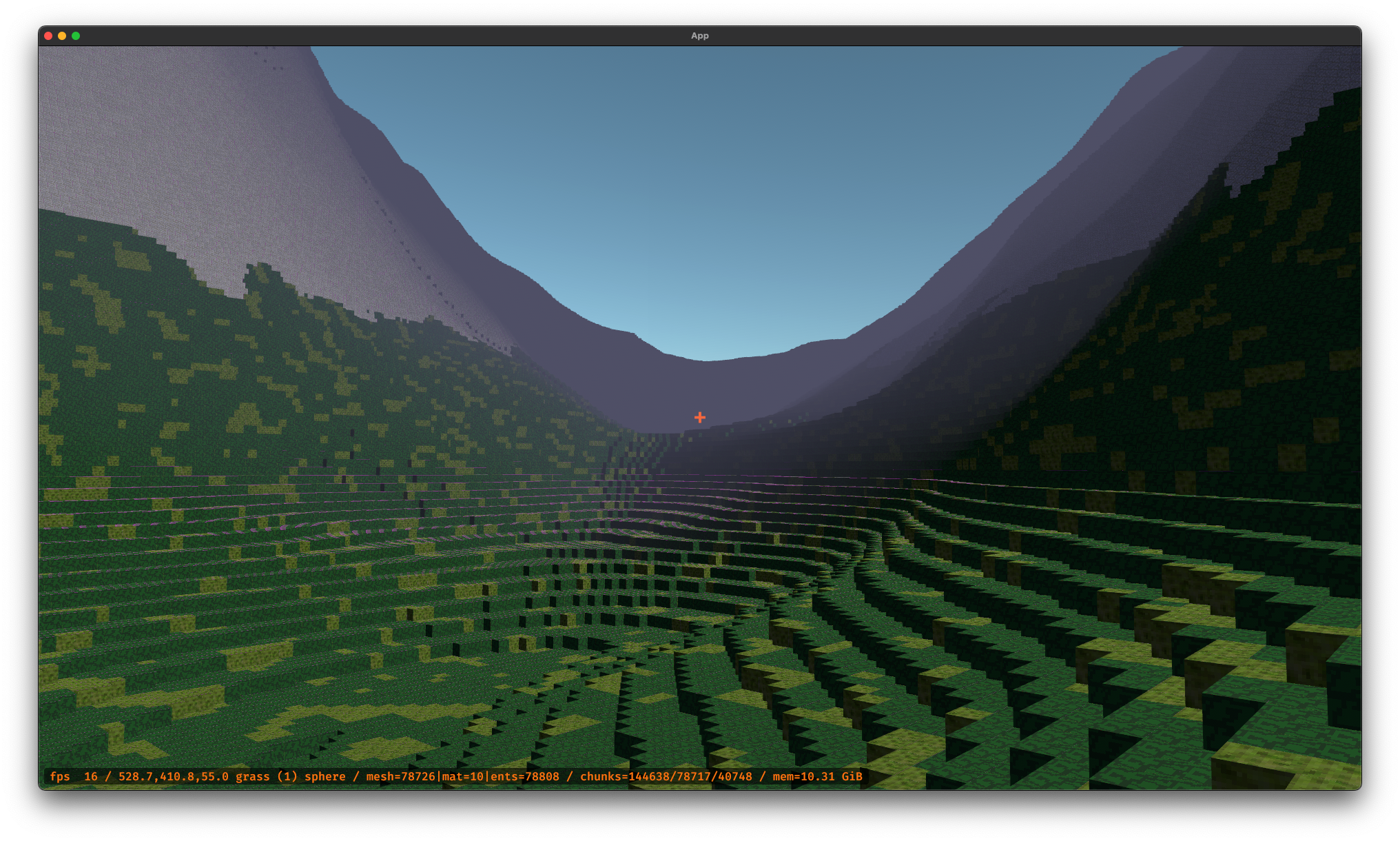

The engine now supports brushes for adding, removing, and painting multiple voxels at once.

Also added a micro heads-up display at the bottom so toggling between voxel types and brushes is not purely an exercise in memorization.

There are still a few bugs and, now with larger persisted worlds, the frames-per-second (fps) is quickly dropping out of the ~60 range. Nonetheless, the engine is feeling more capable.

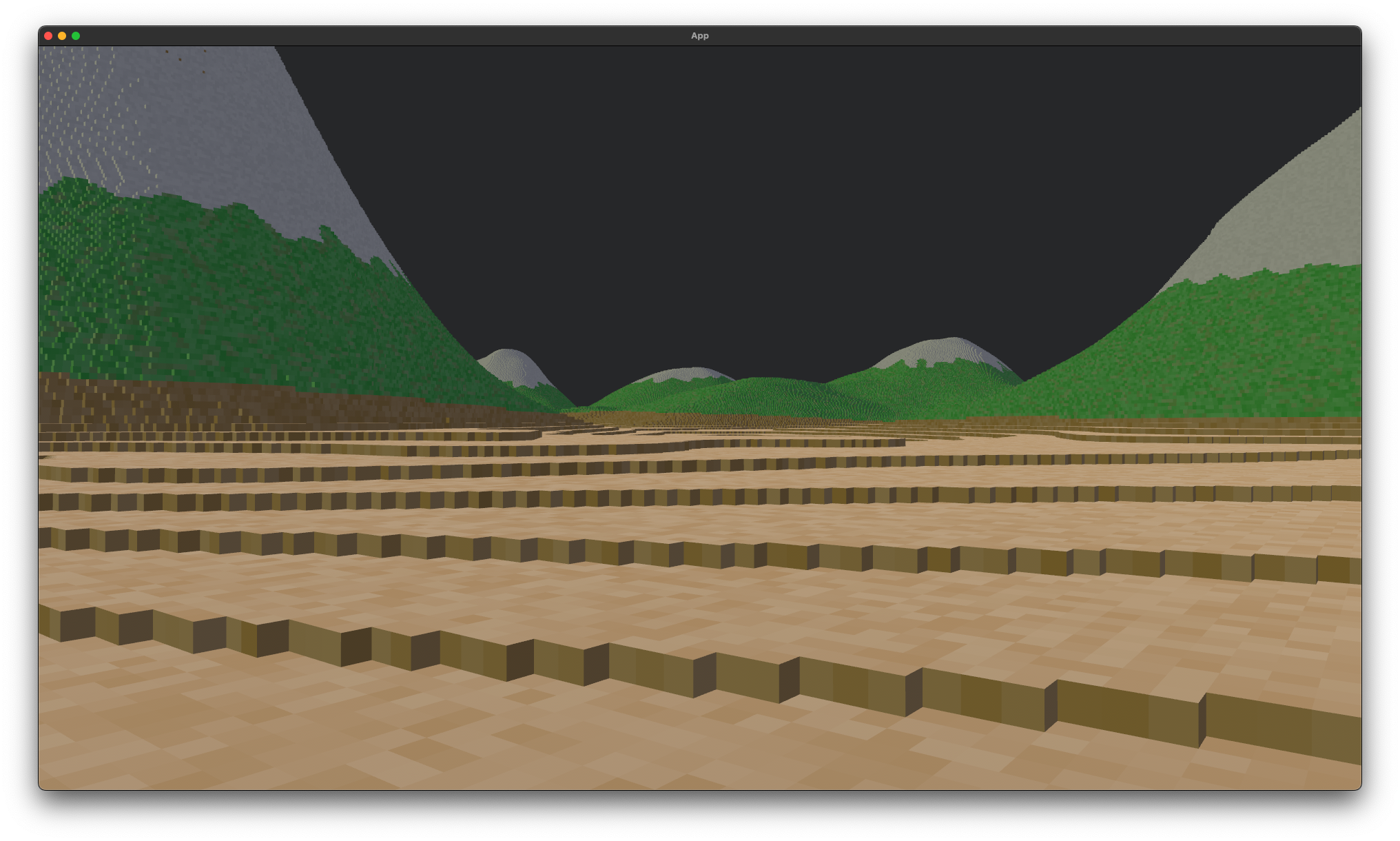

Working a bit on terrain generation. Turns out it can be a lot more fun working on this in native desktop with Rust rather than WebGL given the runtime limitations of the latter.

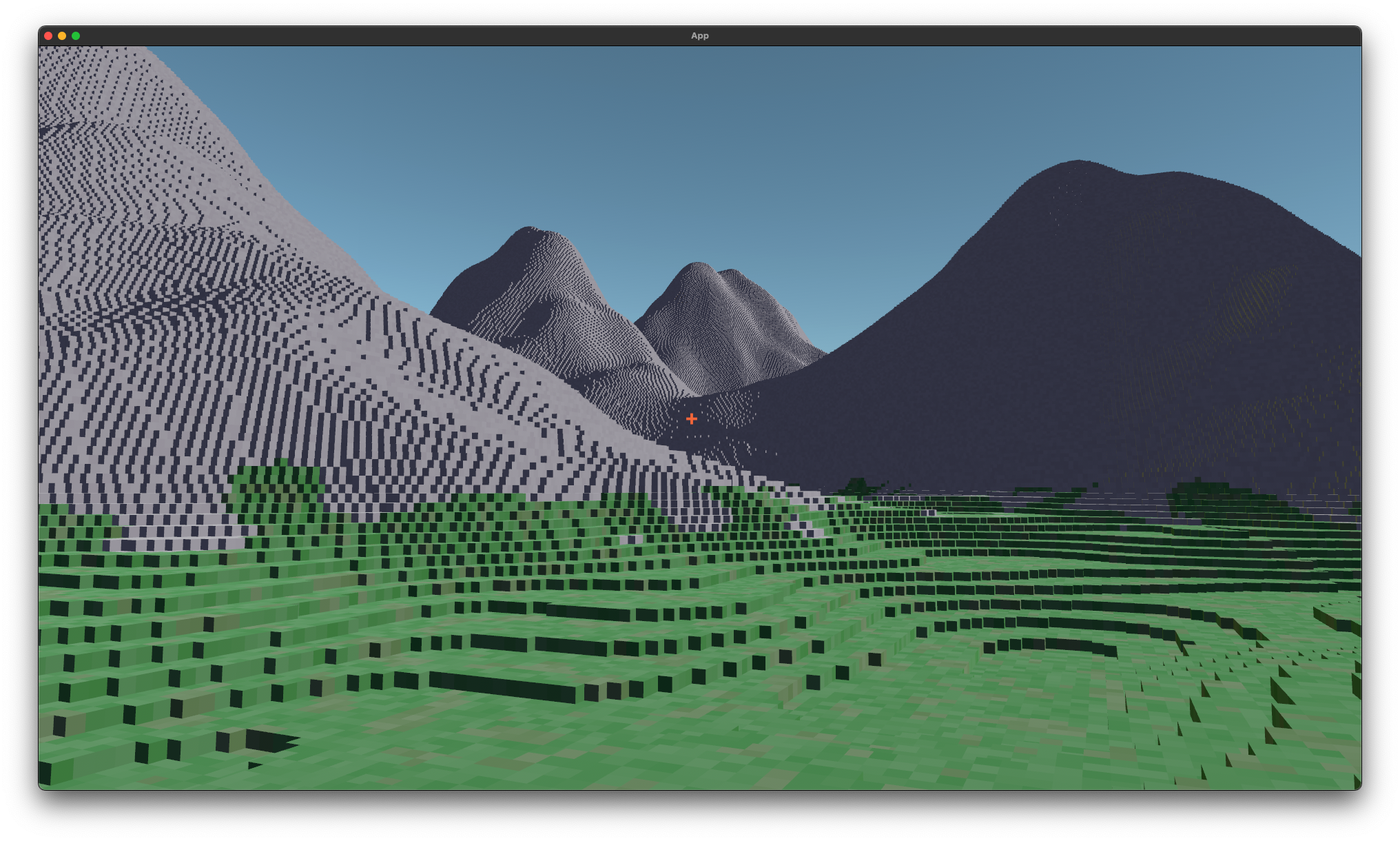

Still working on voxel rendering in Bevy. Restarted from scratch as I've learned a bit more since last time...

I've been working on a lot of different experiments lately. Merging my recent interest in learning Bevy, Rust, and WASM with my long-time interest in voxel rendering, I've been working on an experimental program to convert a Quake 2 BSP38 map file into a voxelized representation.

The above is slow and inefficient, but it's an interesting starting point as it's all running in WASM using Bevy.

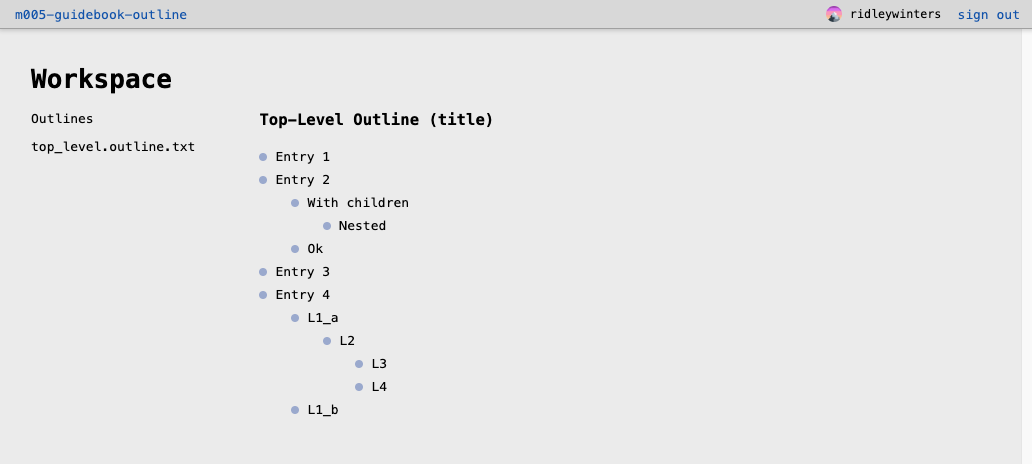

Working on another guidebook-outline prototype.

This loads an outline directly from a GitHub repo (once the user has authenticated with GitHub). The below is just a proof of concept.

Using GitHub as the backing store is a fundamental premise for the app as it gives the user complete control over their data.

The next sorts of things to build into the prototype are:

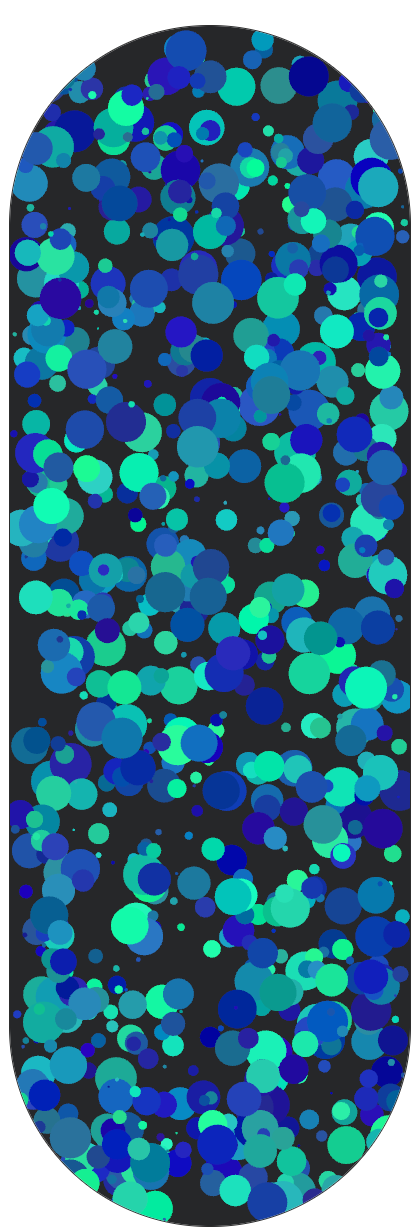

Added an experiment of rendering with Bevy using WASM. It's nothing too exciting as I'm new to Bevy, WASM, and still relatively inexperienced with Rust!

A few notes on the development:

I wanted to host this experiment (and future ones) within pages of the Docusaurus app. I certainly do not know the "best" way to do this yet, but one definite constraint is to pass the canvas id to the WASM module at startup rather than hard-coding it in both the WASM and the browser.

As far as I can tell, the WASM init function does not take arguments, therefore the startup is exposed via a separate wasm_bindgen exported function called start.

In the Rust snippet below, you can see we do nothing in main and explicitly pass in an id to the WindowPlugin in a separate start function.

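```rust
use bevy::prelude::*;
use wasm_bindgen::prelude::*;

fn main() {
    // Intentionally empty: the start() entrypoint does the work as it
    // can be called from the browser with parameters.
}

#[wasm_bindgen]
pub fn start(canvas_id: &str) {
    // The canvas field is a selector, so prefix the element id with '#'.
    let id = format!("#{}", canvas_id);
    App::new()
        .add_plugins(DefaultPlugins.set(WindowPlugin {
            primary_window: Some(Window {
                canvas: Some(id.into()),
                ..default()
            }),
            ..default()
        }))
        // setup, move_ball, and update_transforms are systems defined
        // elsewhere in the crate.
        .add_systems(Startup, setup)
        .add_systems(
            Update,
            (
                move_ball, //
                update_transforms,
            ),
        )
        .run();
}
```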

The JavaScript code to bootstrap this looks like this:

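```javascript
// moduleName and canvasID are supplied by the hosting page.
const go = async () => {
    let mod = await import(moduleName);
    // The default export is the WASM init function...
    await mod.default();
    // ...and start() is the separate wasm_bindgen export that takes the id.
    await mod.start(canvasID);
};
go();
```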

But admittedly the above is not the actual JavaScript code for hosting the WASM module...

This is workaround code. It "works" but I'm sure there is a correct way to handle this that I was not able to discover!

There's something I don't understand about WASM module loading and, more importantly, reloading/reuse. This is problematic in the context of a Single Page Application (SPA) like Docusaurus: if you navigate to page ABC, then to page XYZ, then back to ABC, any initialization that happened on the first visit to page ABC will happen again on the second visit. In other words, I'm not sure how to make the WASM initialization idempotent.

If there's a correct way to...

...I'd enjoy learning how!
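One possible direction, sketched here and not verified against Bevy or Docusaurus, is to guard the Rust side so that a second call to the exported start function becomes a no-op. The names `STARTED` and `start_once` are hypothetical, and the sketch assumes the browser reuses the same WASM instance when the module is imported again on the same page.

```rust
use std::sync::atomic::{AtomicBool, Ordering};

/// Set to true the first time `start_once` runs in this WASM instance.
/// (Hypothetical name; not part of Bevy or wasm_bindgen.)
static STARTED: AtomicBool = AtomicBool::new(false);

/// Runs `launch` only on the first call; later calls do nothing.
/// Returns true if this call actually ran `launch`.
pub fn start_once(launch: impl FnOnce()) -> bool {
    // `swap` returns the previous value, so only the first caller sees `false`.
    if STARTED.swap(true, Ordering::SeqCst) {
        return false;
    }
    launch();
    true
}
```

The exported `start` could wrap its `App::new()...run()` chain in `start_once`. Note the limitation: this only prevents double initialization. It does not re-attach an already running app to a freshly created canvas element, which the SPA navigation case would likely also need.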

Docusaurus also has logic for renaming, bundling, rewriting, etc. the JavaScript code used on the pages. I'm not sure exactly what it does, but at the end of the day, I did not want Docusaurus manipulating the JS generated by the WASM build process.

Admittedly this is a bit of laziness on my part for not really understanding what Docusaurus does and how best to circumvent it.

I worked around the reload problems and script mangling with a custom MDX Component in Docusaurus that:

- Creates a `<script>` element so Docusaurus can't modify what it does

```tsx
import React from 'react';

export function CanvasWASM({
    id,
    module,
    width,
    height,
    style,
}: {
    id: string,
    module: string,
    width: number,
    height: number,
    style?: React.CSSProperties,
}) {
    React.useEffect(() => {
        // We create a DOM element since Docusaurus ends up renaming /
        // changing the JS file to load the WASM which breaks the import
        // in production. This is pretty hacky but it works (for now).
        const script = document.createElement('script');
        script.type = 'module';
        script.text = `
let key = 'wasm-retry-count-${module}-${id}';
let success = setTimeout(function() {
    localStorage.setItem(key, "0");
}, 3500);

const go = async () => {
    try {
        let mod = await import('${module}');
        await mod.default();
        await mod.start('${id}');
        localStorage.setItem(key, "0");
    } catch (e) {
        if (e.message == "unreachable") {
            clearTimeout(success);
            let value = parseInt(localStorage.getItem(key) || "0", 10);
            if (value < 10) {
                console.log("WASM error, retry attempt: ", value);
                setTimeout(function() {
                    localStorage.setItem(key, (value + 1).toString());
                    window.location.reload();
                }, 20 + 100 * value);
            } else {
                throw e;
            }
        }
    }
};
go();
        `.trim();
        document.body.appendChild(script);
    }, []);

    return <canvas id={id} width={width} height={height} style={style}></canvas>;
}
```

In case anyone on the internet runs into this and stumbles upon this page...

I also wasted quite a bit of time on this problem:

- I had a `.gitattributes` file in all my repos to store generated files using git LFS
- That file included `*.wasm` to store those in LFS

This meant my code was working locally but when I was trying to load the WASM files on the published site, the LFS "pointer" text file was being served rather than the binary WASM file itself. It took me a while to figure this out. Ultimately the fix was to remove the `.gitattributes` from the GitHub Pages repo so LFS is not used on the published site. (Aside: this might be a good reason to consider hosting this site via another platform, but I'll leave that for another day!)
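A quick way to spot this failure is to look at the first bytes of whatever the server returns. The sketch below is a hypothetical helper (`classify` and `Served` are illustrative names) that relies on two known facts: WASM binaries start with the magic bytes `\0asm`, and git LFS pointer files start with the line `version https://git-lfs.github.com/spec/v1`.

```rust
/// What a downloaded ".wasm" file actually contains.
#[derive(Debug, PartialEq, Eq)]
pub enum Served {
    Wasm,
    LfsPointer,
    Unknown,
}

/// Every WASM binary begins with these four bytes.
const WASM_MAGIC: &[u8] = b"\0asm";
/// Every git LFS pointer file begins with this text.
const LFS_PREFIX: &[u8] = b"version https://git-lfs.github.com/spec/v1";

/// Classifies the file by inspecting its leading bytes.
pub fn classify(bytes: &[u8]) -> Served {
    if bytes.starts_with(WASM_MAGIC) {
        Served::Wasm
    } else if bytes.starts_with(LFS_PREFIX) {
        Served::LfsPointer
    } else {
        Served::Unknown
    }
}
```

Running a check like this against the published URL's response body (for example in a deploy script) would flag the pointer file immediately instead of surfacing as an opaque load error in the browser.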

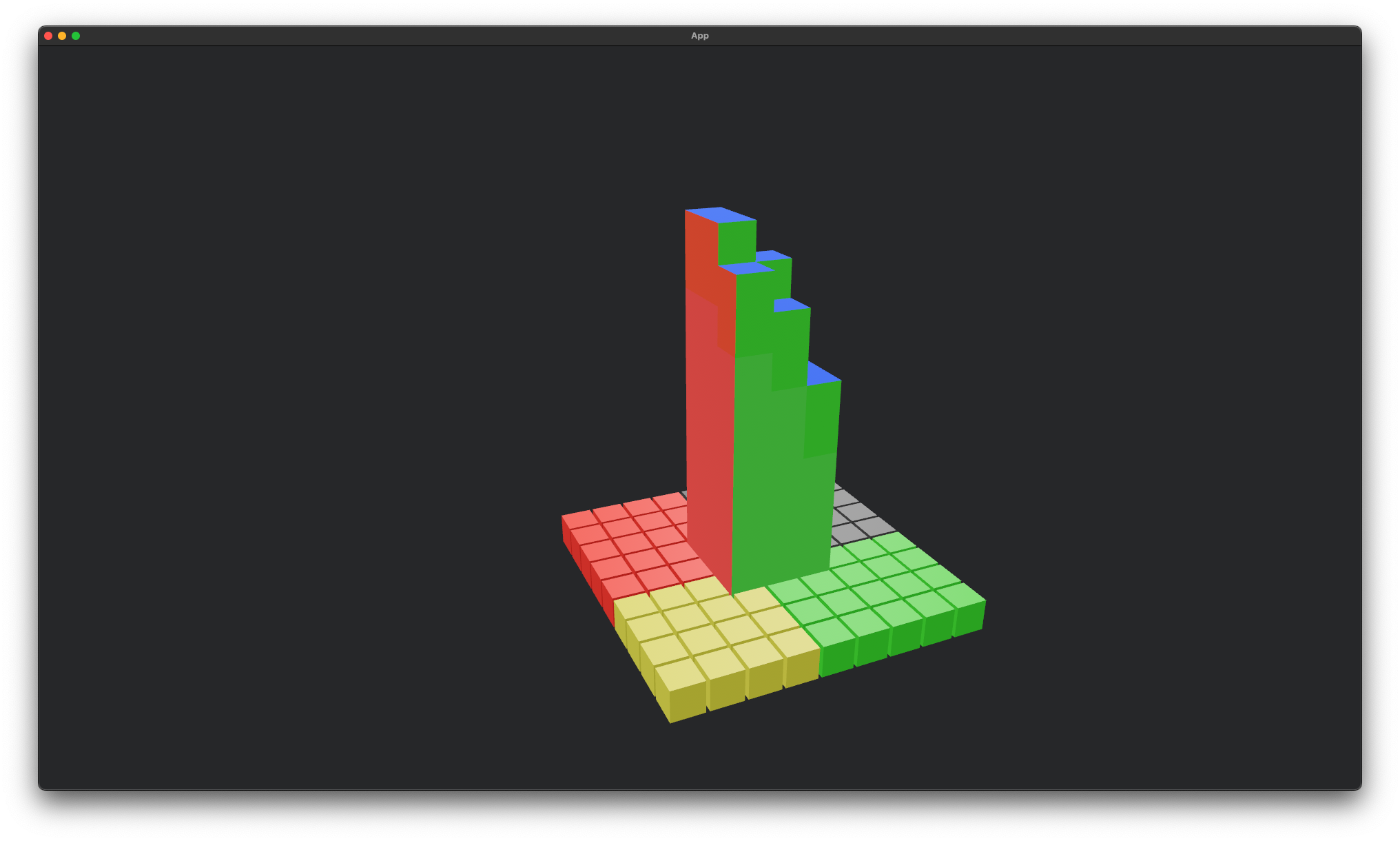

Rewrote the WGPU render pass for rendering the voxels to use an instance buffer that generates 36 vertices per instance (i.e. vertices for the 6 * 2 = 12 triangles of a voxel).

I'm not sure if this is going to be the right approach, but I wanted to at least experiment with it.

Update: naively swapping the instance-based rendering in for the more traditional triangle renderer was MUCH slower. I'm not confident this is going to be a better rendering method.

The approach used in the above video is fairly naive. Each voxel is given a vec3<f32> position and a vec3<f32> color. The buffer itself has one entry for each filled voxel. Each entry is drawn with 36 vertices to generate the full cube for that voxel. So that's 36 vertices and 6 x 32 bits = 192 bits = 24 bytes per instance.
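As a sanity check on those numbers, here is a minimal sketch of what such a per-instance record could look like on the CPU side. The struct and constant names are assumptions for illustration, not the engine's actual types.

```rust
/// Hypothetical per-voxel instance record: two vec3<f32> values.
/// `repr(C)` keeps the field order and layout predictable for GPU upload.
#[repr(C)]
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct VoxelInstance {
    pub position: [f32; 3],
    pub color: [f32; 3],
}

pub const FACES_PER_CUBE: usize = 6;
pub const TRIANGLES_PER_FACE: usize = 2;
/// Vertices drawn per instance with an unindexed triangle list.
pub const VERTICES_PER_CUBE: usize = FACES_PER_CUBE * TRIANGLES_PER_FACE * 3;

/// Size in bytes of an instance buffer holding `filled_voxels` entries.
pub fn instance_buffer_bytes(filled_voxels: usize) -> usize {
    filled_voxels * std::mem::size_of::<VoxelInstance>()
}
```

With this layout a completely filled 32x32x8 chunk would need 8192 x 24 bytes, which is the motivation for packing the per-voxel data more tightly.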

Given that each voxel is a cube, this could be optimized to figure out the view direction and draw only the 3 faces that are oriented towards the camera. Thus we could reduce the instancing down to 3 quads = 12 vertices (18 if drawn as an unindexed triangle list, to match the 36 count above). This should be easy to do in the shader: for each axis it only has to choose between the left or right face, the top or bottom face, and the front or back face, and it can make each choice by checking whether the x, y, or z component of the view direction is positive or negative.
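The sign test can be sketched on the CPU side as follows. This is an illustration under an assumed convention (the view direction points from the camera toward the voxel), with hypothetical names `Face` and `visible_faces`; the real version would live in the shader.

```rust
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Face {
    NegX,
    PosX,
    NegY,
    PosY,
    NegZ,
    PosZ,
}

/// Picks the one face per axis that can face the camera.
/// Assumption: `view_dir` points from the camera toward the voxel.
pub fn visible_faces(view_dir: [f32; 3]) -> [Face; 3] {
    // A face is visible when its outward normal points back at the camera,
    // i.e. opposite to the view direction along that axis.
    let pick = |component: f32, neg: Face, pos: Face| {
        if component > 0.0 {
            neg
        } else {
            pos
        }
    };
    [
        pick(view_dir[0], Face::NegX, Face::PosX),
        pick(view_dir[1], Face::NegY, Face::PosY),
        pick(view_dir[2], Face::NegZ, Face::PosZ),
    ]
}
```

One caveat: with a perspective camera the direction differs per voxel, so the vector from the camera position to each voxel should be used rather than a single camera forward vector.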

Chunks are currently 32x32x8, or 2^5 x 2^5 x 2^3. That means each chunk needs only 13 bits to store the location of a voxel within a chunk. I could send each "instance" as 13 bits plus a vec3 translation for the whole chunk. Let's round that up to a u16 per voxel. I could make the chunks 16x16x8 (11 bits of position) and limit each chunk to a palette of 32 values (the remaining 5 bits), thus compacting both the position and color into a u16.

To leave room for flexibility, though, I'll assume a u32 holds all the per-voxel info in a 32x32x8 chunk. That's 8192 voxels x 4 bytes = 32 KB per chunk. Given 512 MB reserved for chunks, that's 16k chunks, or 128x128 chunks horizontally assuming a depth of 1. If we assume we need an average chunk depth of 4 (which seems pretty aggressive), that's 64x64 chunks. If we assume we load chunks equally in front of and behind the player, that's a view distance of 32 chunks. Since each chunk is 32 voxels across and there are 4 voxels per meter, that's a view distance of 256 meters, roughly a quarter of a kilometer.
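The u32-per-voxel estimate can be checked with a small sketch. The bit layout below (x in the low 5 bits, then y, then z, with the remaining 19 bits as a free-form payload for color or type) is an assumption chosen for illustration, as are all the names.

```rust
pub const CHUNK_X: u32 = 32;
pub const CHUNK_Y: u32 = 32;
pub const CHUNK_Z: u32 = 8;
pub const VOXELS_PER_CHUNK: u32 = CHUNK_X * CHUNK_Y * CHUNK_Z; // 8192 = 2^13

const X_BITS: u32 = 5;
const Y_BITS: u32 = 5;
const Z_BITS: u32 = 3;
const POS_BITS: u32 = X_BITS + Y_BITS + Z_BITS; // 13

/// Packs a chunk-local position and a 19-bit payload into one u32.
pub fn pack(x: u32, y: u32, z: u32, payload: u32) -> u32 {
    debug_assert!(x < CHUNK_X && y < CHUNK_Y && z < CHUNK_Z);
    debug_assert!(payload < (1 << (32 - POS_BITS)));
    x | (y << X_BITS) | (z << (X_BITS + Y_BITS)) | (payload << POS_BITS)
}

/// Inverse of `pack`: returns (x, y, z, payload).
pub fn unpack(v: u32) -> (u32, u32, u32, u32) {
    let x = v & (CHUNK_X - 1);
    let y = (v >> X_BITS) & (CHUNK_Y - 1);
    let z = (v >> (X_BITS + Y_BITS)) & (CHUNK_Z - 1);
    (x, y, z, v >> POS_BITS)
}

pub const BYTES_PER_CHUNK: u64 = VOXELS_PER_CHUNK as u64 * 4;
pub const BUDGET_BYTES: u64 = 512 * 1024 * 1024;
pub const MAX_CHUNKS: u64 = BUDGET_BYTES / BYTES_PER_CHUNK;

/// View distance in meters for a square region of chunk columns centered
/// on the player, given the average number of chunks stacked per column.
pub fn view_distance_meters(avg_depth: u64, voxels_per_meter: u64) -> u64 {
    let columns = MAX_CHUNKS / avg_depth;
    let side = (columns as f64).sqrt() as u64; // chunks along one edge
    let radius_chunks = side / 2; // half in front, half behind
    radius_chunks * CHUNK_X as u64 / voxels_per_meter
}
```

With an average depth of 4 and 4 voxels per meter, `view_distance_meters(4, 4)` reproduces the 256 meter figure.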

There's also a question of sending chunks where only the visible voxels are sent. This would be more efficient on the surface, as it saves a lot of space for mostly empty chunks. However, it makes modifications more difficult: the WGPU buffers are not resizable and there's not a fixed position-to-index mapping function. It might be nice to support both: optimize a chunk that hasn't been modified for a while and leave recently modified chunks as the full voxel space.
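A rough sketch of the "support both" idea, on the CPU side only and with hypothetical names: a chunk is either dense (fixed position-to-index mapping, easy to modify) or sparse (only filled voxels, smaller to store and send). Writes expand a sparse chunk back to dense, and a separate policy, not shown, would decide when an idle chunk gets compacted.

```rust
pub const VOXELS_PER_CHUNK: usize = 32 * 32 * 8;
pub const EMPTY: u32 = 0;

pub enum Chunk {
    /// One slot per voxel position; index maps directly to position.
    Dense(Vec<u32>),
    /// Only filled voxels, stored as (index, value) in ascending index order.
    Sparse(Vec<(u16, u32)>),
}

impl Chunk {
    pub fn new_dense() -> Self {
        Chunk::Dense(vec![EMPTY; VOXELS_PER_CHUNK])
    }

    /// Converts a dense chunk to the sparse form; no-op if already sparse.
    pub fn compact(&mut self) {
        let filled: Vec<(u16, u32)> = match self {
            Chunk::Dense(cells) => cells
                .iter()
                .enumerate()
                .filter(|(_, v)| **v != EMPTY)
                .map(|(i, v)| (i as u16, *v))
                .collect(),
            Chunk::Sparse(_) => return,
        };
        *self = Chunk::Sparse(filled);
    }

    /// Converts a sparse chunk back to the dense form; no-op if already dense.
    pub fn expand(&mut self) {
        let entries = match self {
            Chunk::Sparse(entries) => std::mem::take(entries),
            Chunk::Dense(_) => return,
        };
        let mut cells = vec![EMPTY; VOXELS_PER_CHUNK];
        for (i, v) in entries {
            cells[i as usize] = v;
        }
        *self = Chunk::Dense(cells);
    }

    /// Writes always go through the dense form.
    pub fn set(&mut self, index: usize, value: u32) {
        self.expand();
        if let Chunk::Dense(cells) = self {
            cells[index] = value;
        }
    }

    pub fn get(&self, index: usize) -> u32 {
        match self {
            Chunk::Dense(cells) => cells[index],
            Chunk::Sparse(entries) => entries
                .iter()
                .find(|(i, _)| *i as usize == index)
                .map_or(EMPTY, |(_, v)| *v),
        }
    }

    /// Number of entries that would be uploaded for this chunk.
    pub fn stored_len(&self) -> usize {
        match self {
            Chunk::Dense(cells) => cells.len(),
            Chunk::Sparse(entries) => entries.len(),
        }
    }
}
```

Since the GPU buffers can't be resized, a real version would presumably also need to allocate a new buffer whenever a chunk switches representation.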