Voxel textures

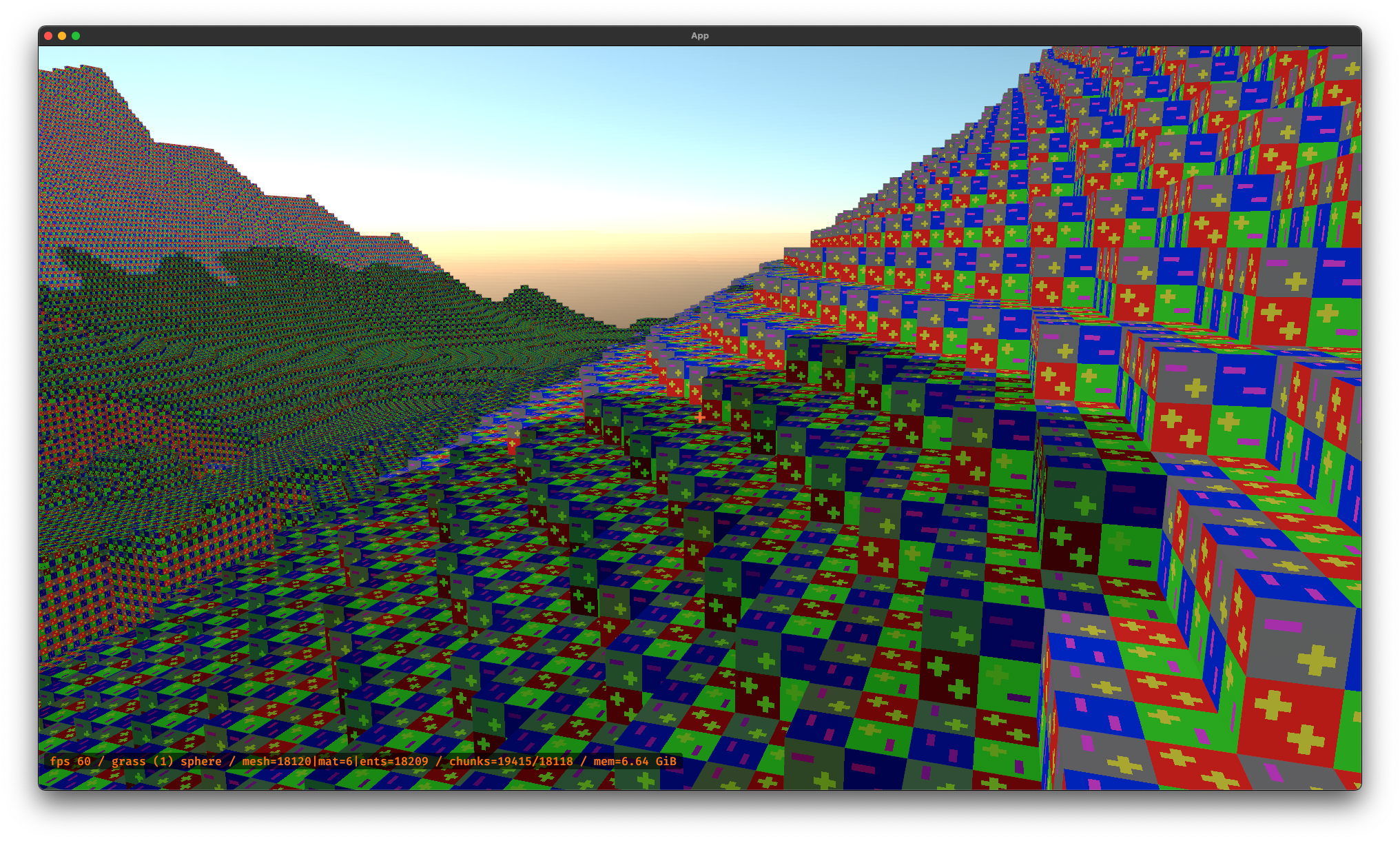

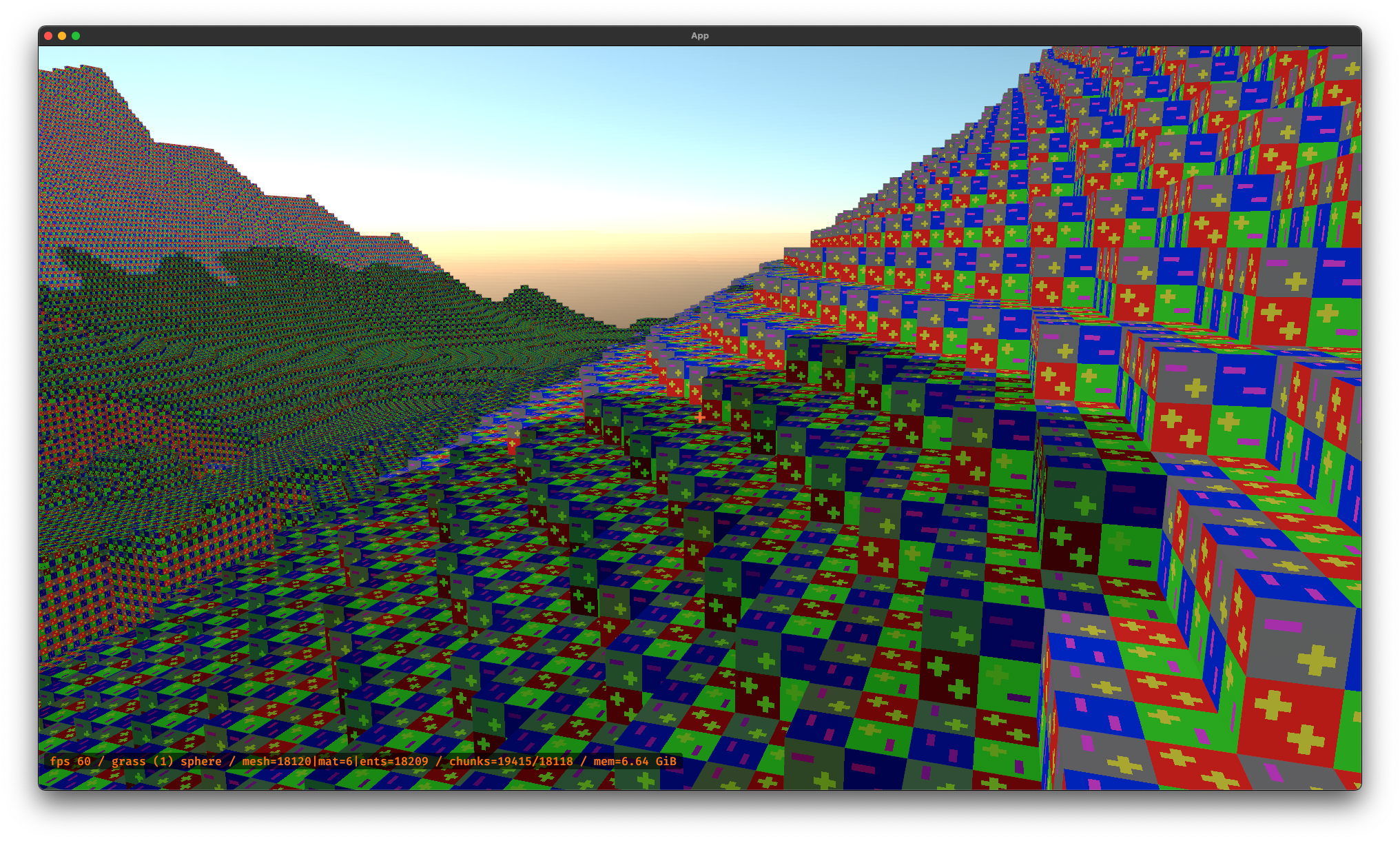

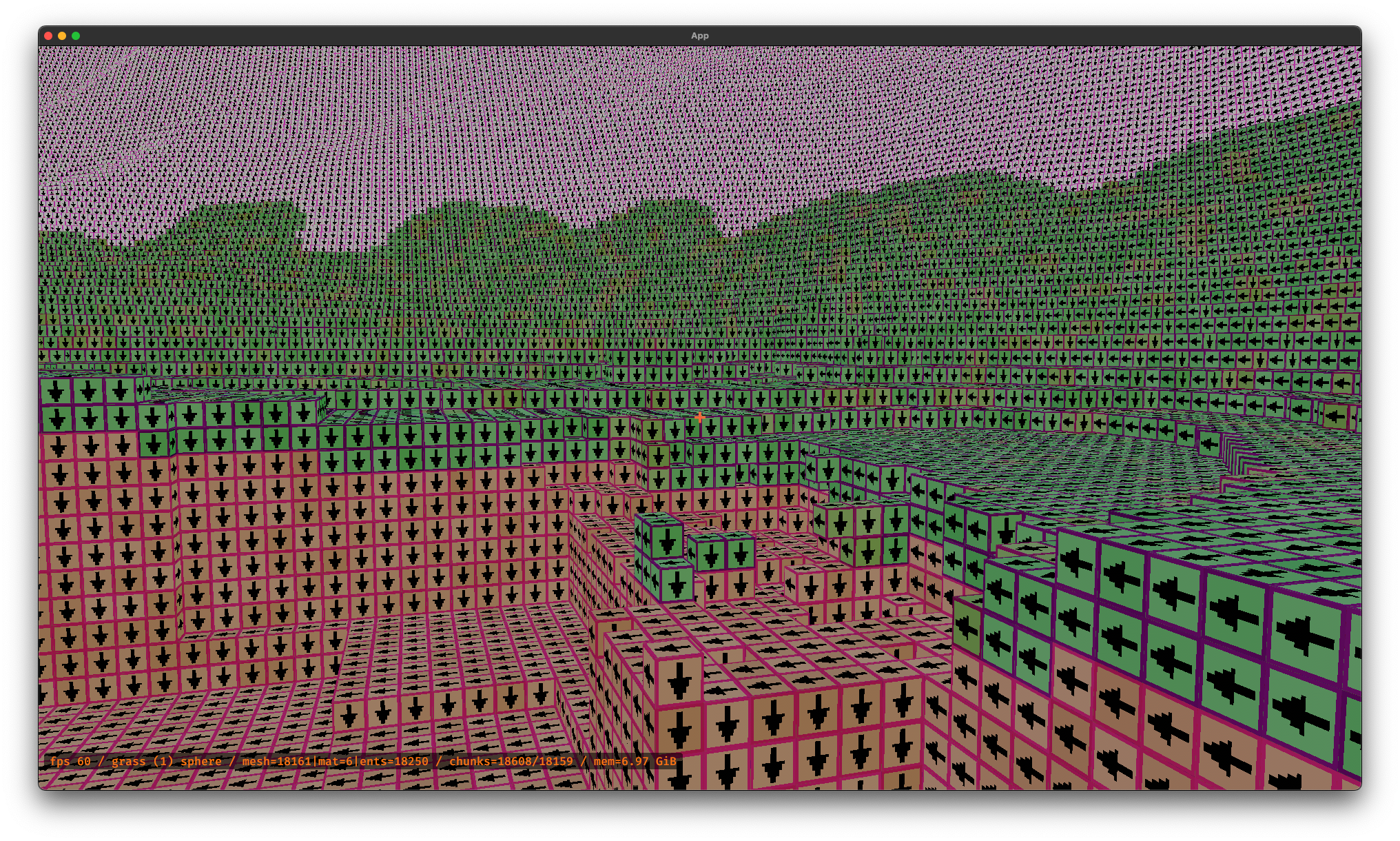

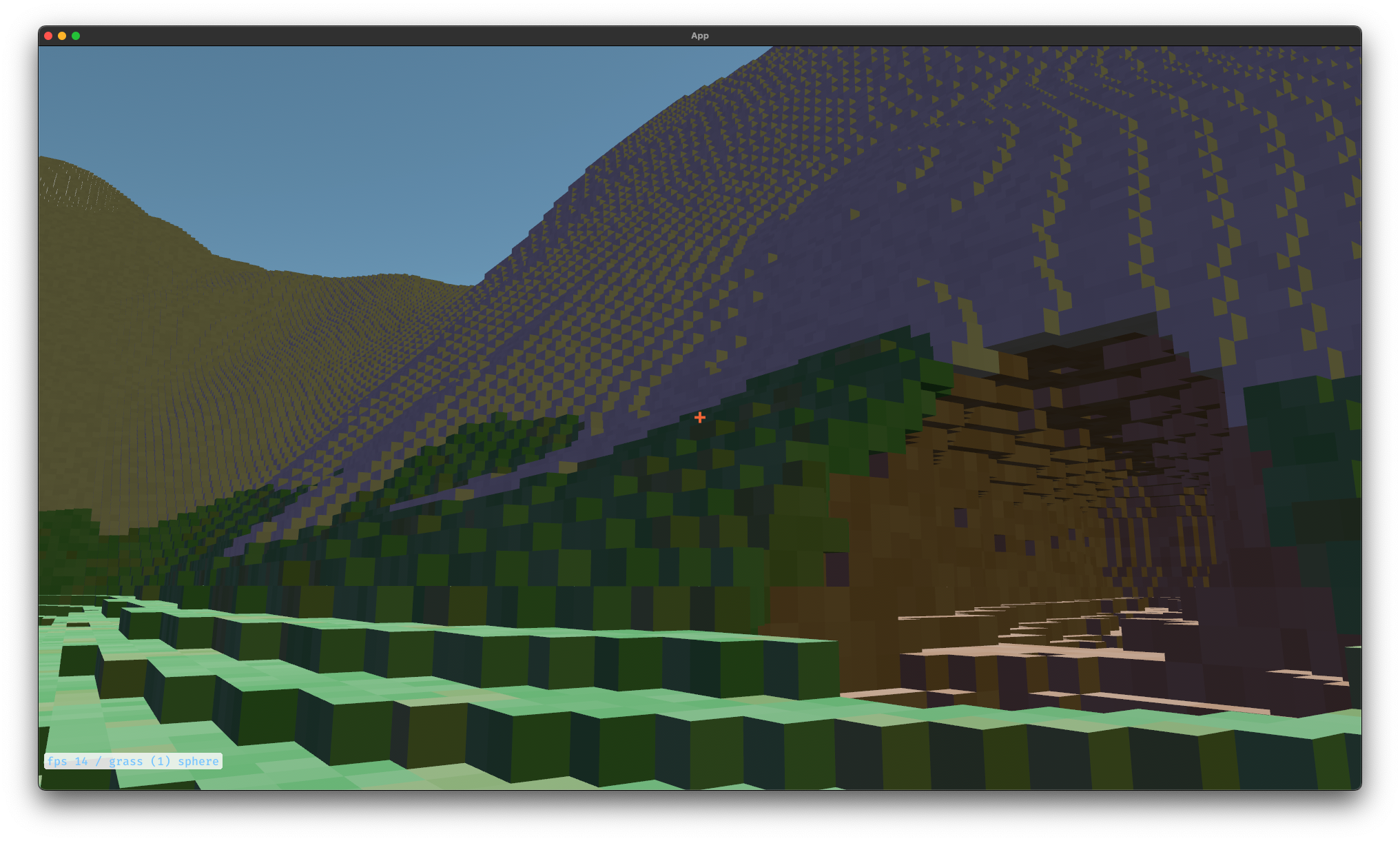

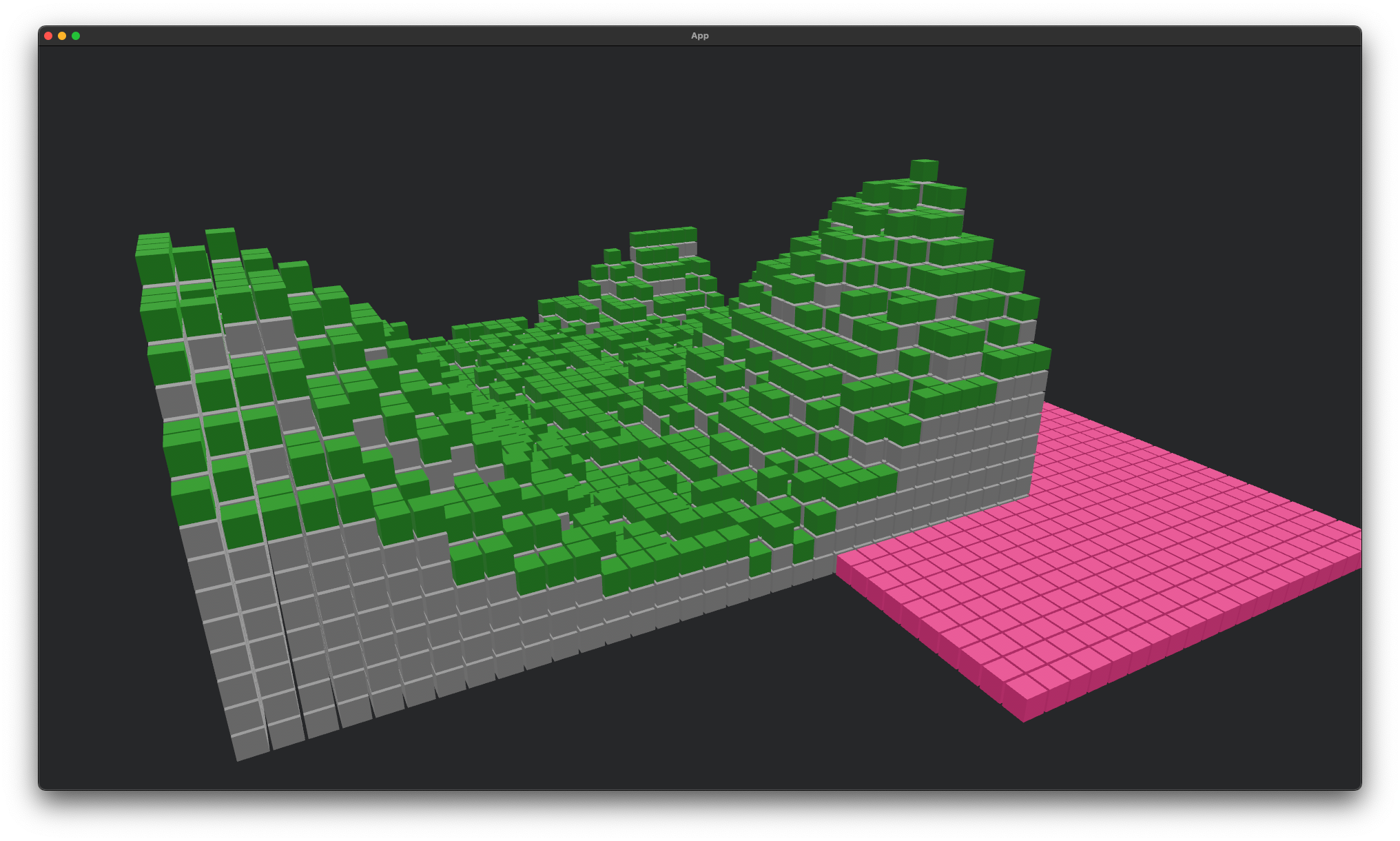

Debug textures to make sure things are working at all...

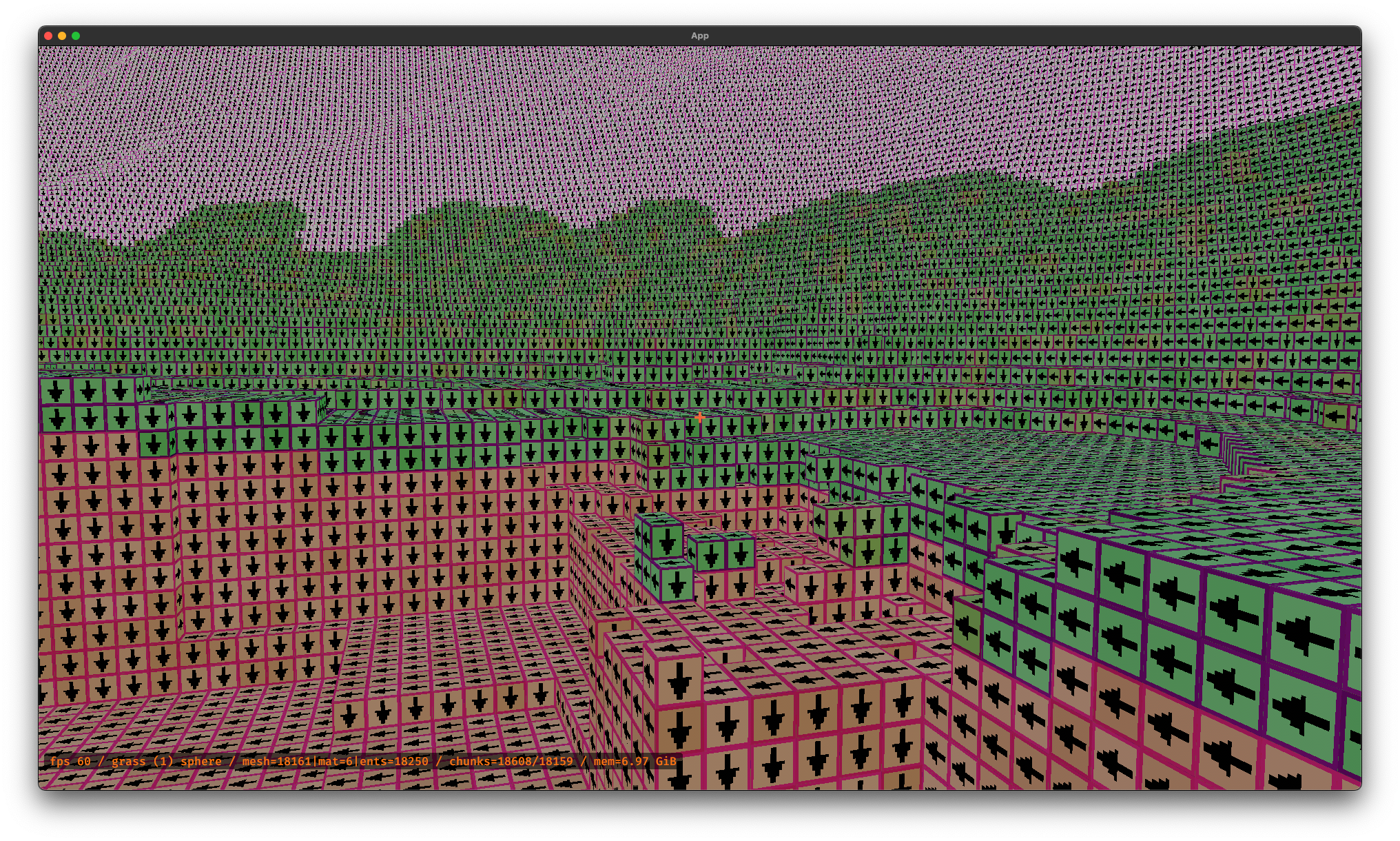

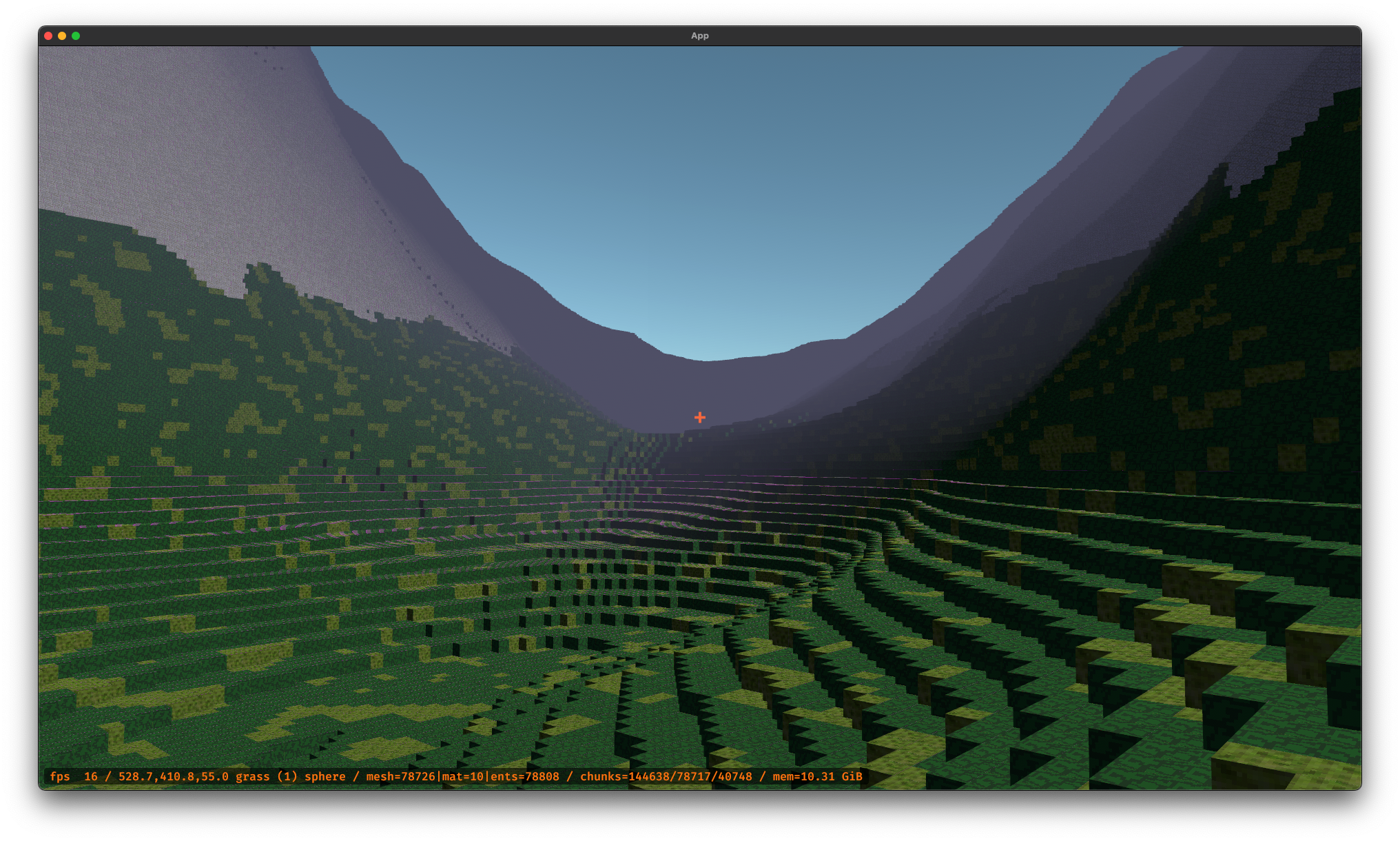

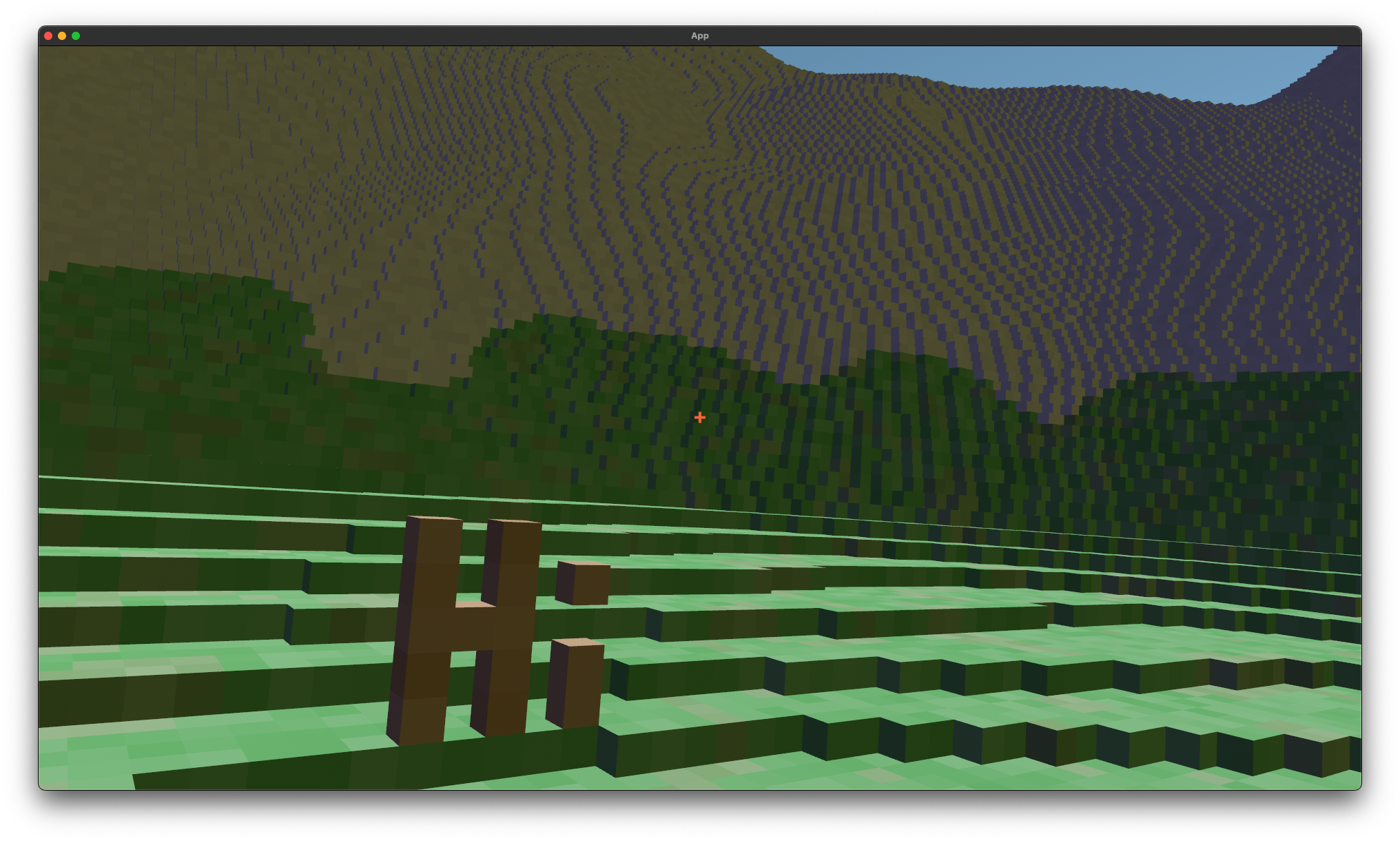

And finally working...

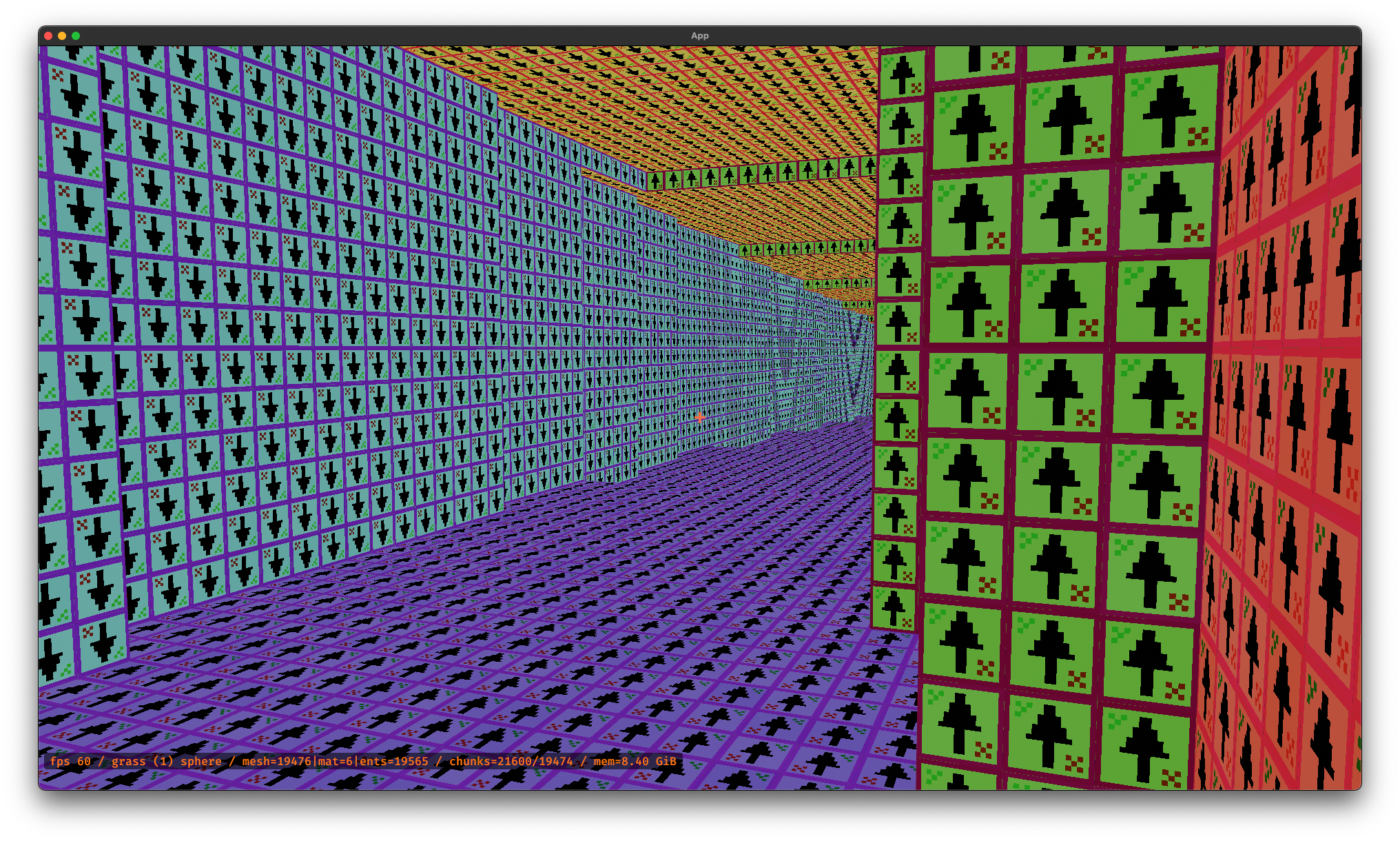

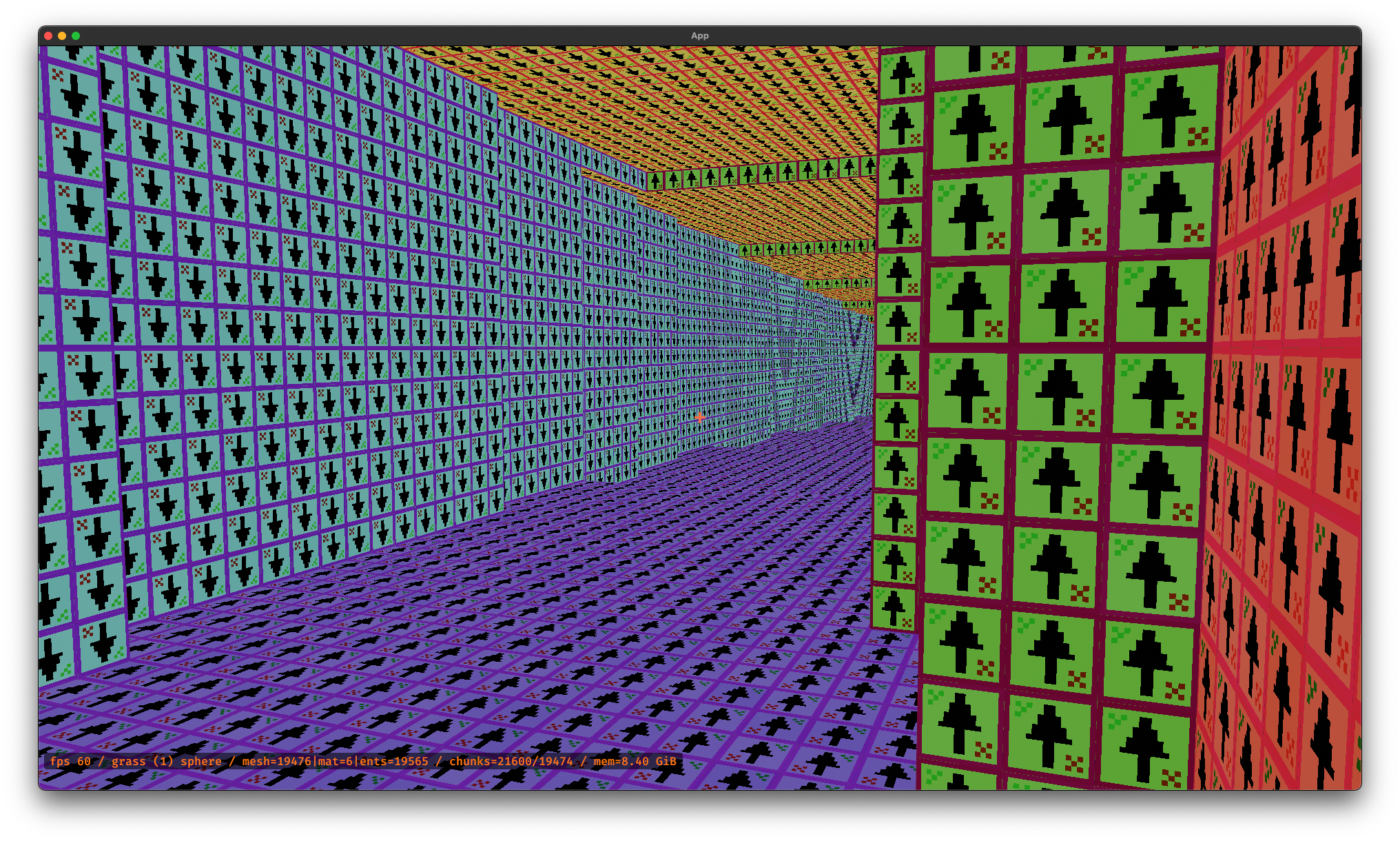

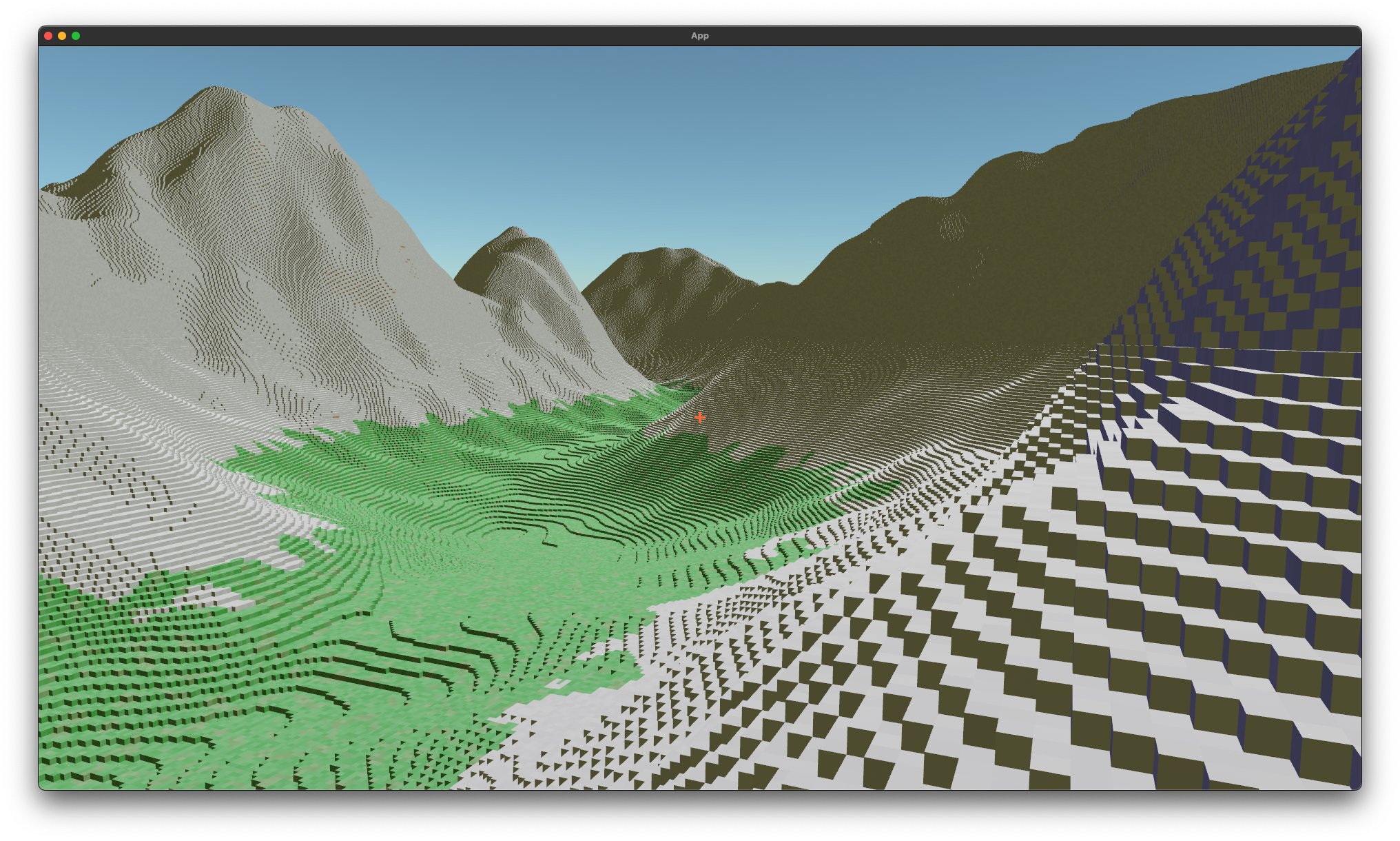

Dug a tunnel into the side of the mountain...

The engine now supports brushes for adding, removing, and painting multiple voxels at once.

Also added a micro heads-up display at the bottom so toggling between voxel types and brushes is not purely an exercise in memorization.

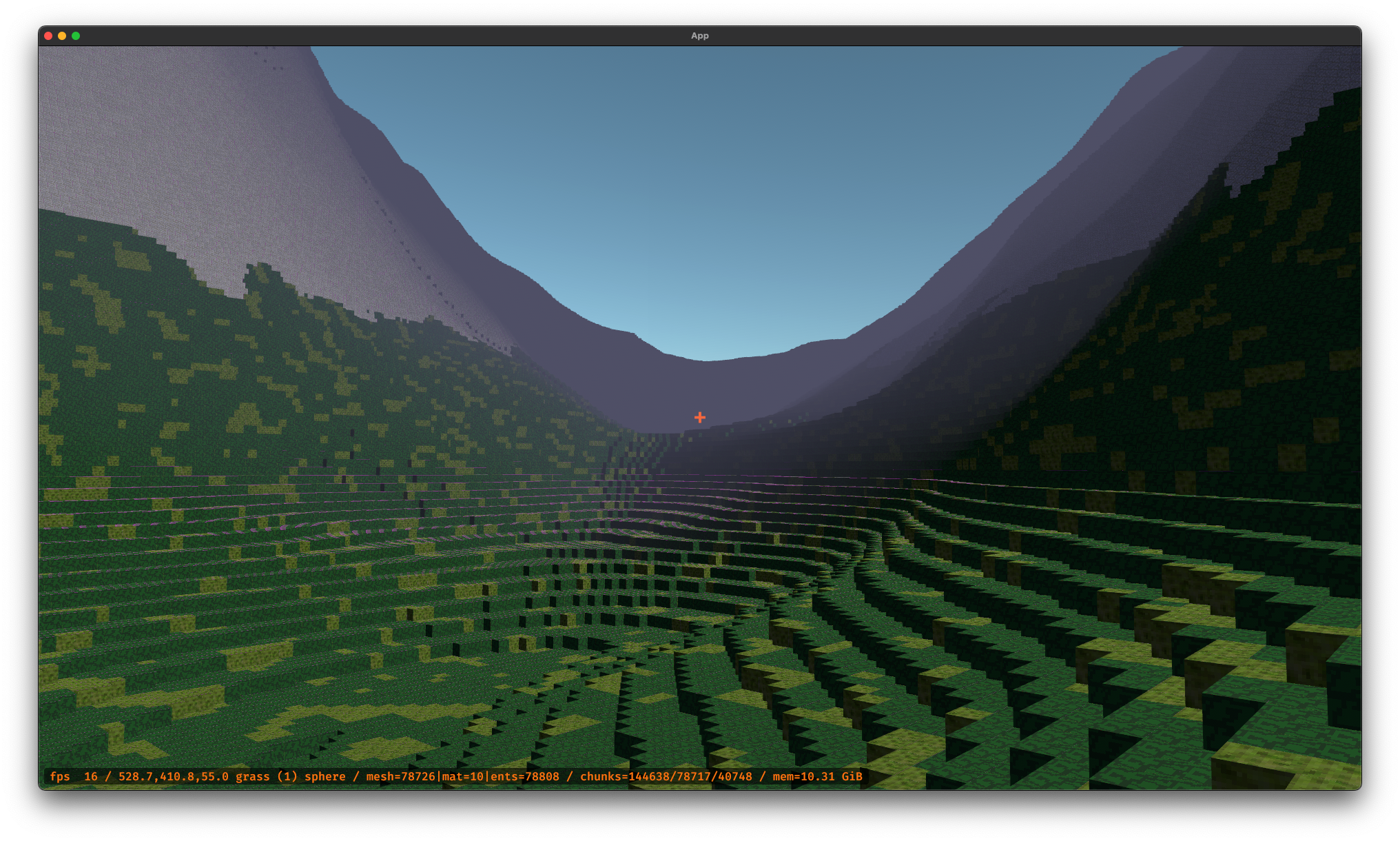

There are still a few bugs and, now with larger persisted worlds, the frames-per-second (fps) is quickly dropping out of the ~60 range. Nonetheless, the engine is feeling more capable.

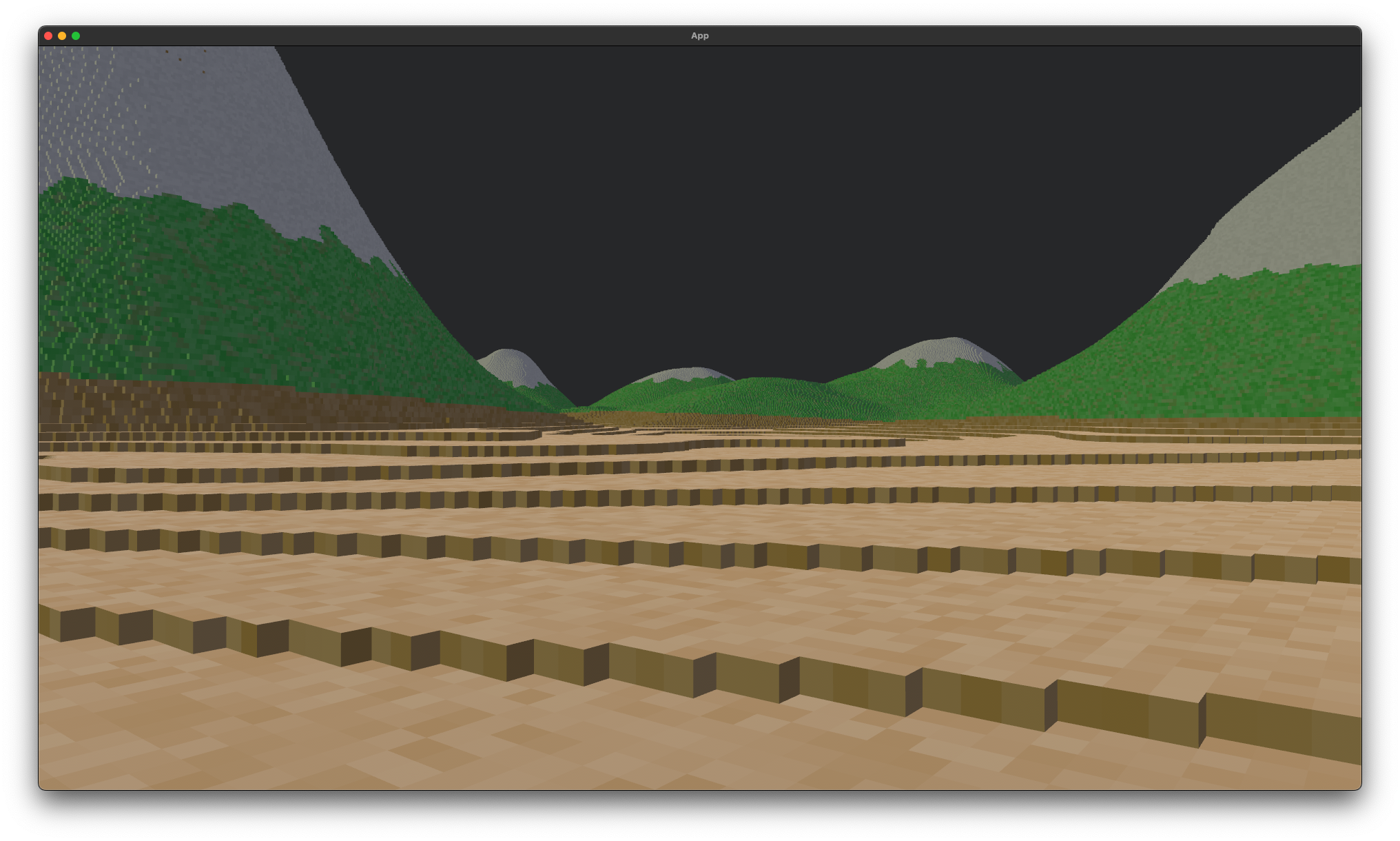

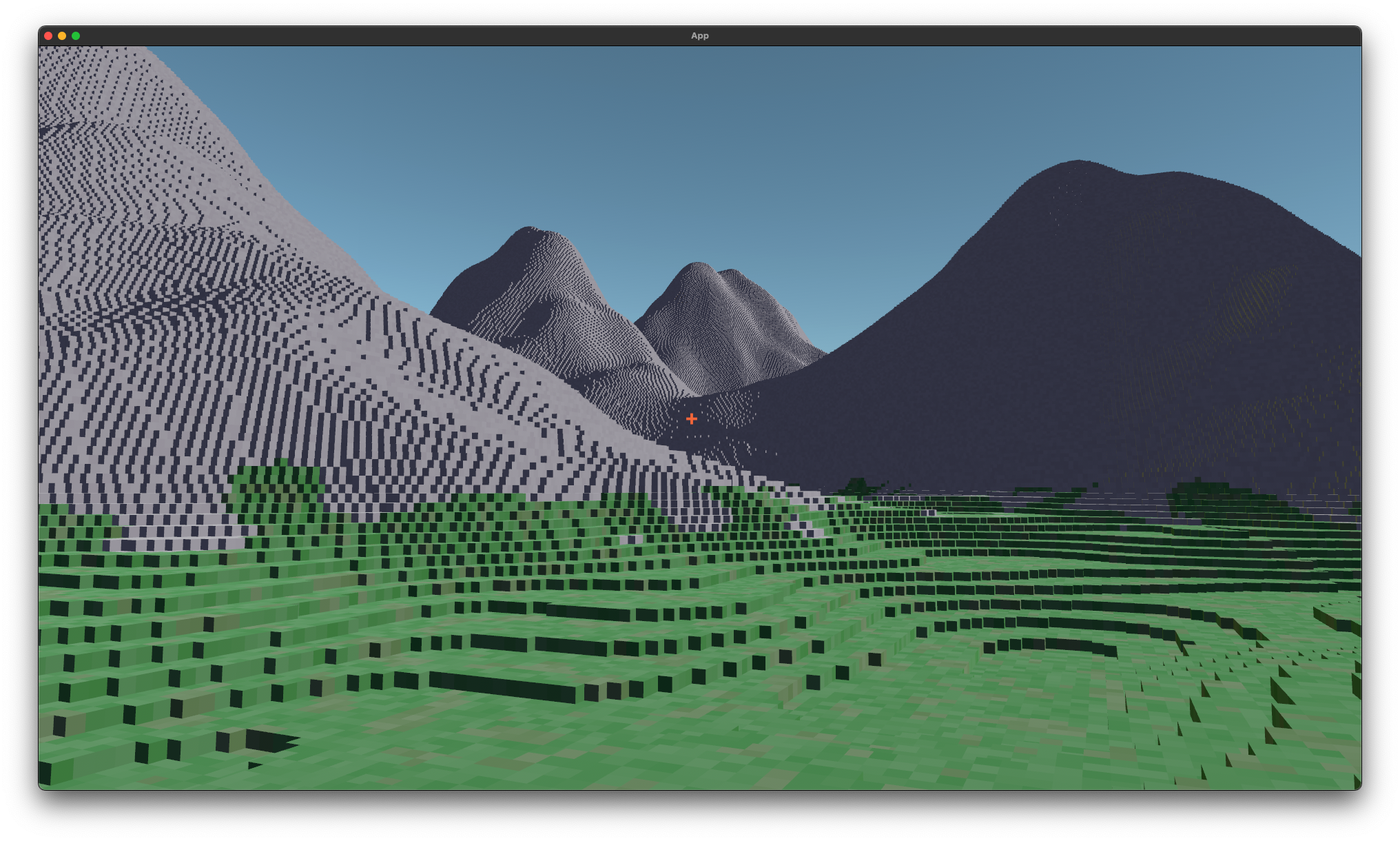

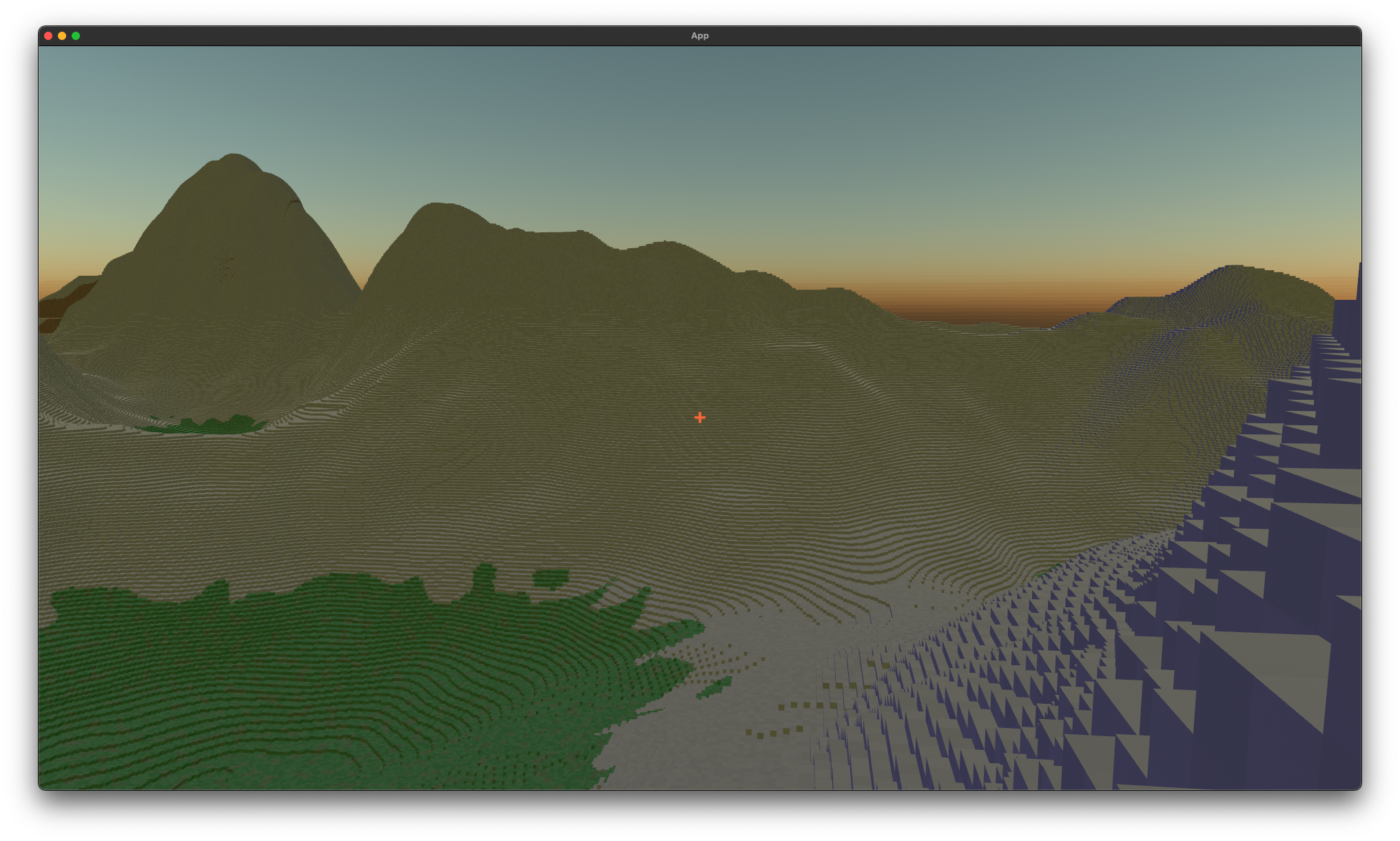

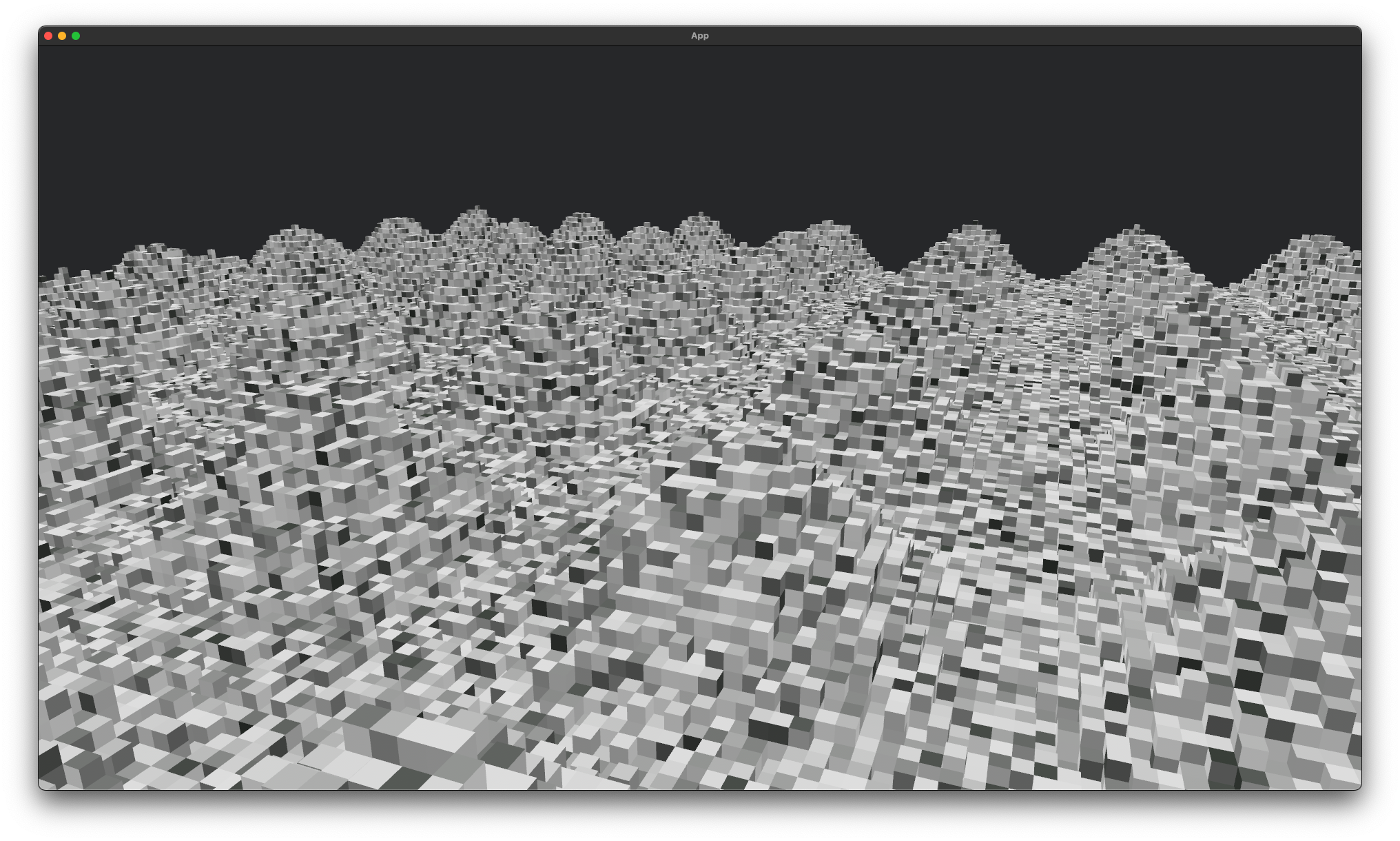

Working a bit on terrain generation. Turns out it can be a lot more fun working on this in native desktop with Rust rather than WebGL given the runtime limitations of the latter.

Still working on voxel rendering in Bevy. Restarted from scratch as I've learned a bit more since last time...

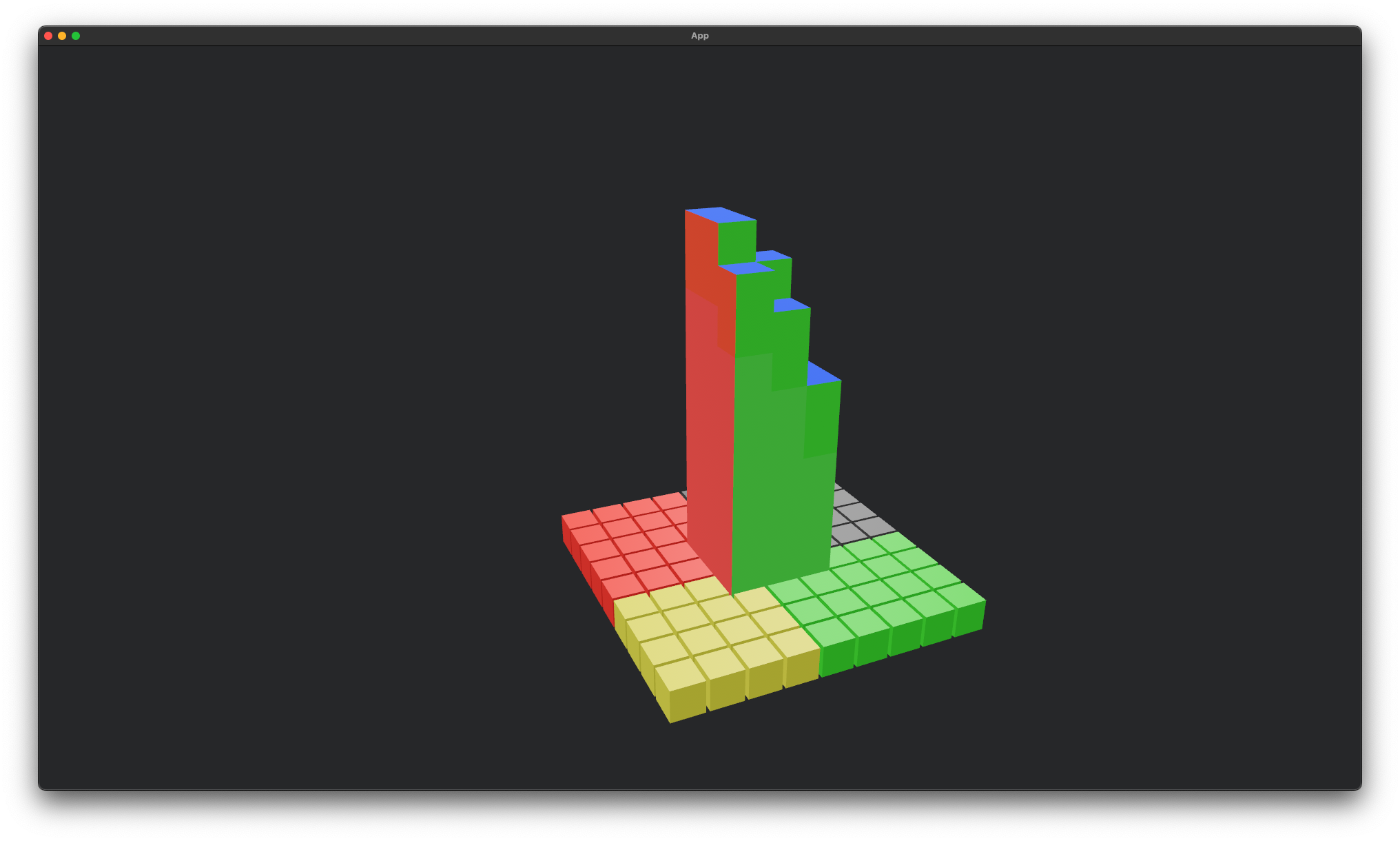

Been working on a lot of different experiments lately. Merging my recent interest in learning Bevy, Rust, and WASM with my long-time interest in voxel rendering, I've been working on an experimental program to convert a Quake 2 BSP38 map file into a voxelized representation.

The above is slow and inefficient, but it's an interesting starting point as it's all running in WASM using Bevy.

Rewrote the WGPU render pass for rendering the voxels to use an instance buffer that generates 36 vertices per instance (i.e. vertices for the 6 * 2 = 12 triangles of a voxel).

I'm not sure if this is going to be the right approach, but I wanted to at least experiment with it.

Update: naively swapping the instance-based rendering in for the more traditional triangle renderer was MUCH slower. I'm not confident this is going to be a better rendering method.

The approach used in the above video is fairly naive. Each voxel is given a vec3<f32> position and a vec3<f32> color, and the buffer has one entry for each filled voxel. Each entry is expanded into 36 vertices to generate the full cube for that voxel. So that's 36 vertices and 6 x 32 = 192 bits = 24 bytes per instance.
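To make the layout concrete, here is a minimal CPU-side sketch of that per-voxel entry and of how a vertex index in 0..36 could be mapped to a cube corner. The names `VoxelInstance`, `cube_corner`, and `voxel_vertex` are hypothetical, the voxel is assumed to be a unit cube, and the winding order is not adjusted for back-face culling. In the real renderer this mapping would live in the vertex shader.

```rust
/// Hypothetical per-voxel instance entry: position and color, both vec3<f32>.
/// With `repr(C)` this is 6 x 4 = 24 bytes and has no padding.
#[repr(C)]
#[derive(Clone, Copy, Debug, PartialEq)]
struct VoxelInstance {
    position: [f32; 3],
    color: [f32; 3],
}

/// (u, v) offsets for the two triangles that make up one quad.
const QUAD: [(f32, f32); 6] = [
    (0.0, 0.0), (1.0, 0.0), (1.0, 1.0),
    (0.0, 0.0), (1.0, 1.0), (0.0, 1.0),
];

/// Maps a vertex index in 0..36 to a corner of the unit cube.
/// Faces are ordered -x, +x, -y, +y, -z, +z, with 6 vertices each.
fn cube_corner(vertex_index: u32) -> [f32; 3] {
    let face = (vertex_index / 6) as usize;
    let (u, v) = QUAD[(vertex_index % 6) as usize];
    let axis = face / 2; // 0 = x, 1 = y, 2 = z
    let side = (face % 2) as f32; // 0.0 = min side, 1.0 = max side
    let mut p = [0.0; 3];
    p[axis] = side;
    p[(axis + 1) % 3] = u;
    p[(axis + 2) % 3] = v;
    p
}

/// World-space position of one generated vertex of one voxel.
fn voxel_vertex(instance: &VoxelInstance, vertex_index: u32) -> [f32; 3] {
    let c = cube_corner(vertex_index);
    [
        instance.position[0] + c[0],
        instance.position[1] + c[1],
        instance.position[2] + c[2],
    ]
}
```

Calling `voxel_vertex` for indices 0 through 35 yields the 12 triangles of one cube.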

Given that each voxel is a cube, this could be optimized by working out the view direction and drawing only the 3 faces oriented towards the camera. That would reduce the work per instance to 3 quads = 12 vertices (or 18 if drawn as a non-indexed triangle list). It should be easy to do: the shader has to choose between the left or right face, the top or bottom face, and the front or back face, and it can decide each of those three by simply checking whether the x, y, or z component of the view direction is positive or negative.
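The sign test could look roughly like the sketch below. The `Face` enum and `camera_facing_faces` are hypothetical names, and `view_dir` is assumed to point from the camera towards the voxel, so a positive x component means the camera sits on the low-x side and sees the -x face. With a perspective camera the direction would probably need to be computed per voxel rather than once per frame.

```rust
/// The six faces of an axis-aligned voxel.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum Face {
    NegX,
    PosX,
    NegY,
    PosY,
    NegZ,
    PosZ,
}

/// Picks one face per axis based on the sign of the view direction.
/// Assumption: `view_dir` points from the camera towards the voxel.
fn camera_facing_faces(view_dir: [f32; 3]) -> [Face; 3] {
    [
        if view_dir[0] > 0.0 { Face::NegX } else { Face::PosX },
        if view_dir[1] > 0.0 { Face::NegY } else { Face::PosY },
        if view_dir[2] > 0.0 { Face::NegZ } else { Face::PosZ },
    ]
}
```

A component of exactly zero means that face pair is edge-on, so either choice draws a degenerate sliver at worst.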

Chunks are currently 32x32x8, or 2^5 x 2^5 x 2^3. That means each chunk needs only 13 bits to store the location of a voxel within the chunk. I could send each "instance" as 13 bits plus a vec3 translation for the whole chunk. Let's round that up to a u16 per voxel. I could make the chunks 16x16x8 (11 bits of position) and limit each chunk to a palette of 32 values (5 bits), thus compacting both the position and color into a u16. But to leave room for flexibility, I'll assume a u32 holds all the per-voxel info in a 32x32x8 chunk.

That's 32 KB per chunk (8192 voxels x 4 bytes). Given 512 MB reserved for chunks, that's 16k chunks, or 128x128 chunks horizontally assuming a depth of 1. If we assume we need an average chunk depth of 4 (which seems pretty aggressive), that's 64x64 chunks. If we assume we load chunks equally in front of and behind the player, that's a view distance of 32 chunks. Since each chunk is 32 voxels wide and there are 4 voxels per meter, that's a view distance of 256 meters, roughly a quarter of a kilometer.
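As a sanity check on those numbers, here is a small sketch of one possible u32 packing plus the memory budget arithmetic. The bit order (x in the low bits, then y, then z, then a 19-bit payload), the choice of z as the 8-deep axis, and the function names are all assumptions for illustration, not a settled format.

```rust
const X_BITS: u32 = 5; // 32 voxels wide
const Y_BITS: u32 = 5; // 32 voxels long
const Z_BITS: u32 = 3; // 8 voxels deep (assumed to be the z axis)
const INDEX_BITS: u32 = X_BITS + Y_BITS + Z_BITS; // 13 bits of position
const PAYLOAD_BITS: u32 = 32 - INDEX_BITS; // 19 bits left for color etc.

/// Packs a chunk-local position and a payload into one u32.
fn pack_voxel(x: u32, y: u32, z: u32, payload: u32) -> u32 {
    assert!(x < (1 << X_BITS) && y < (1 << Y_BITS) && z < (1 << Z_BITS));
    assert!(payload < (1 << PAYLOAD_BITS));
    x | (y << X_BITS) | (z << (X_BITS + Y_BITS)) | (payload << INDEX_BITS)
}

/// Inverse of `pack_voxel`: returns (x, y, z, payload).
fn unpack_voxel(packed: u32) -> (u32, u32, u32, u32) {
    let x = packed & ((1 << X_BITS) - 1);
    let y = (packed >> X_BITS) & ((1 << Y_BITS) - 1);
    let z = (packed >> (X_BITS + Y_BITS)) & ((1 << Z_BITS) - 1);
    let payload = packed >> INDEX_BITS;
    (x, y, z, payload)
}

/// Reproduces the view distance estimate for a given memory budget.
fn view_distance_meters(budget_bytes: u64, avg_chunk_depth: u64) -> u64 {
    let bytes_per_chunk = (1u64 << INDEX_BITS) * 4; // 8192 voxels x 4 bytes
    let max_chunks = budget_bytes / bytes_per_chunk;
    let columns = max_chunks / avg_chunk_depth; // chunk columns in the plane
    let side = (columns as f64).sqrt() as u64; // square grid of columns
    let view_chunks = side / 2; // half in front, half behind the player
    view_chunks * 32 / 4 // 32 voxels per chunk width, 4 voxels per meter
}
```

With a 512 MB budget and an average depth of 4, `view_distance_meters(512 * 1024 * 1024, 4)` works out to the 256 meters estimated above.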

There's also the question of sending only the visible voxels of each chunk. On the surface this would be more efficient, as it saves a lot of space for mostly empty chunks. However, it makes modifications more difficult: the WGPU buffers are not resizable and there's no longer a fixed position-to-index mapping function. It might be nice to support both: optimize a chunk that hasn't been modified for a while and leave recently modified chunks as the full voxel space.
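One way the "support both" idea might be modeled on the CPU side is sketched below. `ChunkStorage` and its methods are hypothetical, a value of 0 is assumed to mean "empty", and for simplicity the sparse form keeps every non-empty voxel rather than only the visible ones.

```rust
const VOXELS_PER_CHUNK: usize = 32 * 32 * 8;

/// Hypothetical two-mode chunk storage.
#[derive(Clone, Debug, PartialEq)]
enum ChunkStorage {
    /// Full voxel space: the index is the position, 0 means empty.
    Dense(Vec<u32>),
    /// Only non-empty voxels, stored as (chunk-local index, value).
    Sparse(Vec<(u16, u32)>),
}

impl ChunkStorage {
    /// Compact form for a chunk that has not been modified for a while.
    fn compacted(&self) -> ChunkStorage {
        match self {
            ChunkStorage::Dense(cells) => {
                let mut entries = Vec::new();
                for (i, &value) in cells.iter().enumerate() {
                    if value != 0 {
                        entries.push((i as u16, value));
                    }
                }
                ChunkStorage::Sparse(entries)
            }
            ChunkStorage::Sparse(_) => self.clone(),
        }
    }

    /// Full form for a chunk that is being edited: fixed size, fixed mapping.
    fn expanded(&self) -> ChunkStorage {
        match self {
            ChunkStorage::Sparse(entries) => {
                let mut cells = vec![0u32; VOXELS_PER_CHUNK];
                for &(i, value) in entries {
                    cells[i as usize] = value;
                }
                ChunkStorage::Dense(cells)
            }
            ChunkStorage::Dense(_) => self.clone(),
        }
    }
}
```

The dense form keeps the fixed position-to-index mapping that makes edits cheap, while the sparse form trades that away for a smaller upload.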